Is adding a permission system in Node.js desirable — or even feasible? James Snell explores the challenges, workarounds and potential payoffs of permissions in Node.

Every so often we get a vulnerability report in Node.js that highlights the fact that the platform allows any arbitrary JavaScript loaded at runtime to access system resources with the full rights of the user.

For example, the npm package manager that ships with Node.js includes a utility called npx. This is a simple tool designed to make it easier to work with command-line tools deployed to the npm package registry without first having those installed. If you have Node.js installed, you can give it a try with a simple package that shows some basic contact information for me:

$ npx jasnell-card

╭────────────────────────────────────────────────────────────────────╮

│ │

│ James M Snell / jasnell │

│ │

│ Work: One Who Writes Codes │

│ Open Source: Node.js Technical Steering Committee ⬢ │

│ │

│ Twitter: https://twitter.com/jasnell │

│ npm: https://npmjs.com/~jasnell │

│ GitHub: https://github.com/jasnell │

│ Card: npx @jasnell/card (via GitHub Package Registry) │

│ │

╰────────────────────────────────────────────────────────────────────╯

The first time you run this command, npx will prompt you to install it. Once it is downloaded and installed, the script is executed locally. This seems simple and straightforward enough until you look at the actual code that was executed. To make it easier, I've included the contents of the jasnell-card script below; see if you can spot the problem:

#!/usr/bin/env node

'use strict';

const fs = require('fs');

const path = require('path');

const output = fs.readFileSync(path.join(__dirname, 'output'), 'utf8');

console.log(output);

// try {

// const { userInfo } = require('os');

// const { resolve } = require('path')

// fs.readdir(resolve(userInfo().homedir, '.ssh'), (err, files) => {

// I could have done something evil here...

// });

// } catch {}

That's right, if that commented out section hadn't been commented out, that script you just downloaded and ran blindly from npm could actually have harvested all of your ssh private keys and sent them to me over the network.

Is this a security vulnerability? Yes! Is it intentional? Also yes. This is just the way Node.js works. Specifically, Node.js unconditionally runs any code that it loads with the permissions of the user that launches it. The next time someone tells you to run an npx command, it may be a good idea to look into what that script is doing before you actually run it!

When the TypeScript and Rust-based deno runtime was announced, it was touted from the outset as "secure by default". While that assertion has yet to be fully and rigorously tested in broad practice, part of the justification behind that claim is that users must explicitly grant permission for deno applications to use the file system and network. If file system access is not granted, for example, the above trick with my "business card" wouldn't be possible in deno.

Over the years, even long before deno emerged, there have been discussions about whether Node.js should introduce a permissions model that would allow blocking access to system resources and APIs, but the project has not been able to gain traction or consensus on a model that would work. A key reason for this has to do with the fundamental complexity of successfully implementing such a mechanism retroactively in the platform.

At NearForm Research, we have been revisiting this problem and exploring a possible approach to implementing it. In this article, we lay out the challenges and questions that must be addressed and answered, including explaining why adding a permission system in Node.js would absolutely be a non-trivial and difficult exercise.

Operating system or platform

One question that immediately comes to mind when talking about a permission system for Node.js is whether Node.js should even bother with enforcing privileged access to system resources. Or should Node.js simply rely on mechanisms provided by the host operating system?

Certainly if we are talking about Node.js applications running in server environments, containers, Kubernetes clusters and so forth, there are more than adequate controls in place already to enforce isolation to keep a Node.js process from accessing anything it shouldn't. In such environments, the container is the sandbox. With properly configured container isolation and network policies, a permission system built into Node.js would be largely useless!

But Node.js is not run only on servers and in container environments. For instance, if you have Slack or Microsoft Teams or VSCode or any number of other Electron-based applications installed on your local workstation, you're running Node.js. If you're running a toolchain of developer tools such as React, eslint, typescript, etc., you're running Node.js. And whenever you're running Node.js, the JavaScript that is executing is running with the full permissions of the user. Do you know what local resources all of those packages in your node_modules folders are using? Have you taken the time to find out?

Our platform and tooling infrastructure is based on a massive degree of trust afforded to code we never look at written by people we never know!

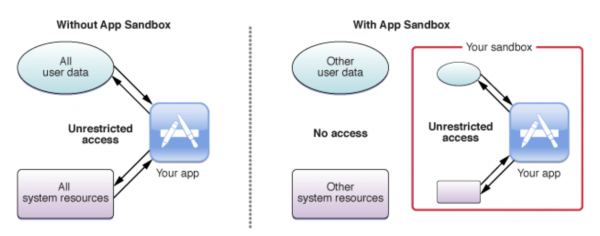

Some desktop operating systems have the ability to lock down local processes. Windows 10 Enterprise and Education editions running on AMD64 architecture now have a Sandbox feature that allows running applications in a protected environment. That feature is not available on all Windows 10 architectures or editions, however. OSX also provides a limited sandbox capability for applications distributed outside of Apple’s App Store; however, this sandbox rarely, if ever, is applied to Node.js applications. It can work, yes, but it's cumbersome to set up and difficult to achieve consistency.

Image Credit: Apple, About App Sandbox

The bottom line is that, on the client side, we cannot reasonably expect that all Node.js supported operating systems will be capable of consistently implementing sandboxes that limit system access or that those mechanisms will even be used. The lack of consistency is a fundamental problem if we want to ensure an experience that protects all users.

So, for the sake of discussion, let's assume that yes, we want the permission system to be provided by Node.js itself — recognising that it will not be useful or used in every deployment scenario. What other issues and challenges do we face?

Defining a permission system for Node.js

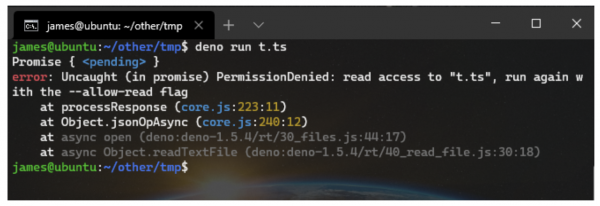

With deno, when a script attempts to access the file system without the user having explicitly granted the script permission to do so, the operation will fail with a PermissionDenied error. This is a part of the "secure by default" assertion. This has been the behaviour in deno since it was released.

Deno running a script without file permissions

Node.js, on the other hand, has gone for 10+ years with the ability to read from the file system enabled by default for all Node.js applications. Changing that to reject file system access by default would quite literally break the workflow for tens of millions, if not hundreds of millions, of users globally. To be clear, the Node.js project absolutely could decide to follow deno's lead here and lock down file system, network and other resources by default, but doing so would likely be the largest breaking change in Node.js history. It's possible but extremely unlikely to ever happen.

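Any permission system added to Node.js must be backwards compatible with the existing ecosystem in order to succeed. Simply put, that means restrictions will always be opt-in: everything is allowed unless the user explicitly denies it. That is the opposite of deno, where everything is denied unless the user explicitly allows it.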

For the sake of this conversation, then, let's start by defining command-line arguments for the node binary that explicitly list which permissions are granted and which are denied:

$ node --policy-deny=net --policy-grant=net.dns

We'll worry about the actual permissions a bit later. In a Node.js permission model, to remain backwards compatible with the existing ecosystem and not break the planet, we have to assume that all permissions are granted by default. In this example, then, only the net permission is denied. The --policy-grant argument does not need to explicitly list file system access (for instance) because file system access is already granted. What we do have to list in --policy-grant are any adjustments to the denied permissions. In the example, we assume that permissions are hierarchical and that --policy-deny=net denies all access to network-related APIs, but we want to allow the application to go ahead and access Node's built-in require('dns') module.

To determine the active permissions, we start with an assumption that all permissions are granted, remove those that are explicitly and implicitly denied, then add back in those that are explicitly granted.

The immediate next question should be: What permissions are implicitly denied if we're assuming that all permissions are granted by default?

A core part of Node.js is the ability to spawn child processes and load native addons. It does no good for Node.js to restrict a process's ability to access the filesystem if the script can just turn around and spawn a separate process that does not have that same restriction! Likewise, given that native addons can directly link to system libraries and execute system calls, they can be used to completely bypass any permissions that have been denied.

The assumption, then, is that explicitly denying any permission should also implicitly deny other permissions that could be used to bypass those restrictions. In the above example, invoking the node binary with --policy-deny=net would also restrict access to loading native addons and spawning child processes. The --policy-grant would be used to explicitly re-enable those implicitly denied permissions if necessary.
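To make the resolution order concrete, here is a minimal sketch of how the active permissions could be computed from the two flags. Nothing here is an existing Node.js API: the permission names are a small illustrative subset, and resolvePermissions, covers and parseList are hypothetical helpers. It also assumes that names are hierarchical and that denying anything implicitly denies everything under special.

```javascript
'use strict';

// Hypothetical permission names, a small subset chosen for illustration.
const ALL_PERMISSIONS = [
  'special.addons', 'special.child_process', 'special.inspector',
  'fs.in', 'fs.out',
  'net.dns', 'net.tcp.in', 'net.tcp.out', 'net.udp',
];

// Assumed rule: this scope is implicitly denied once anything is denied.
const IMPLICIT_DENY = 'special';

// True when `name` equals `scope` or sits below it in the hierarchy.
function covers(scope, name) {
  return name === scope || name.startsWith(`${scope}.`);
}

// Splits a comma-delimited flag value such as "net,fs.out" into names.
function parseList(value = '') {
  return value.split(',').map((s) => s.trim()).filter(Boolean);
}

function resolvePermissions({ deny = '', grant = '' } = {}) {
  const denied = parseList(deny);
  const granted = parseList(grant);
  // Any explicit denial also denies the permissions that could bypass it.
  if (denied.length > 0) denied.push(IMPLICIT_DENY);

  // 1. Start with everything granted.
  const active = new Set(ALL_PERMISSIONS);
  // 2. Remove what is explicitly or implicitly denied.
  for (const name of ALL_PERMISSIONS) {
    if (denied.some((scope) => covers(scope, name))) active.delete(name);
  }
  // 3. Add back what is explicitly granted.
  for (const name of ALL_PERMISSIONS) {
    if (granted.some((scope) => covers(scope, name))) active.add(name);
  }
  return active;
}

// Mirrors: node --policy-deny=net --policy-grant=net.dns
const active = resolvePermissions({ deny: 'net', grant: 'net.dns' });
console.log([...active].sort());
```

With those inputs, only fs.in, fs.out and net.dns remain active: the rest of net is gone because it was denied explicitly, and everything under special is gone because it was denied implicitly.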

The granularity problem

Deno's permissions are fairly simplistic (that's not a bad thing). They are:

- --allow-all (grants all permissions, effectively disabling checking)
- --allow-env (grants access to getting and setting environment variables)
- --allow-hrtime (grants access to high-resolution time measurement)
- --allow-net= (grants network access, with an optional comma-delimited list of allowed domain names)
- --allow-plugin (grants access to native addons / plugins)
- --allow-read= (grants file system read access, with an optional comma-delimited list of directories and file paths)
- --allow-run (grants the ability to spawn child processes)
- --allow-write= (grants file system write access, with an optional comma-delimited list of directories and file paths)

The key question for Node.js, should we choose to implement a permissions system, is whether this level of granularity is sufficient. The deno permissions exist as a flat namespace and apply to the entire process, including all modules loaded and run. The --allow-net flag allows all network access (TCP, UDP, etc.) for the given set of target domain names and allows all dependency modules loaded to access the network. Is that good enough, or does Node.js need something more granular? For instance, Node.js allows creating arbitrary TCP servers and UDP bindings, in addition to HTTP. Should Node.js's permissions specifically allow disabling UDP while allowing TCP (for instance, node --policy-deny=net.udp)?

Flat vs hierarchical permission namespace

While researching this topic, we generated a set of potential permissions for Node.js arranged hierarchically. They are:

- special (those that are implicitly denied if any others are explicitly denied)
  - special.inspector (controls user-code access to the Inspector protocol, which may be used to bypass permissions)
  - special.addons (controls the ability to load native addons)
  - special.child_process (controls the ability to spawn child processes)
- fs (access to file system)
  - fs.in (controls the ability to read from the file system)
  - fs.out (controls the ability to write to the file system)
- net (access to networking operations)
  - net.udp (controls access to UDP)
  - net.dns (controls access to DNS functions)
  - net.tcp (controls access to TCP)
    - net.tcp.in (controls the ability to listen for TCP connections, that is, act as a server)
    - net.tcp.out (controls the ability to initiate TCP connections, that is, act as a client)
  - net.tls (controls the ability to use TLS connections)
    - net.tls.log (controls the ability to enable detailed TLS logging)
- process (controls access to APIs that manipulate process state, e.g. setting the process title or rlimit)
- signal (controls the ability to send OS signals to other processes)
- timing (controls access to high-resolution time measurements)
- user (controls access to user information, such as calling os.userInfo() to get the location of the user's home directory or accessing environment variables)
- workers (controls the ability to spawn worker threads)
- experimental (controls access to experimental APIs)

Looking at this list, the immediate first question should be: Is this level of detail necessary, or is deno's more simplistic approach adequate? That question remains open, and it will need an answer before we can make further progress on the permissions system.

For example, is there enough reason to break down the set of net permissions to this degree? Or is it sufficient to say, no matter what the protocol you're using, network access is allowed or denied? In deno, the --allow-net flag allows an application to act as both a TCP client and server, without differentiating between inbound and outbound connections. Would that also be enough for Node.js or do we need to better differentiate between those?

Let's assume, however, for the sake of this conversation that we're going with this kind of hierarchical permission namespace.

Process or module scoped? Or both?

As I've mentioned, deno's permissions are always process-scoped. When net is denied, it's denied for the entire application and all modules that application imports. Would process scope be sufficient for Node.js? Some have argued that it is not.

While discussing the possibility of adding permissions to Node.js, the question has been raised about whether the permissions could be applied per module. What exactly this means depends entirely on whom you ask.

For some, per module permissions mean that the active permissions for a process are the union of permissions configured (per their respective package.json files) by all loaded modules and those granted by the user of the process. In this approach, modules essentially declare what permissions they need and some part of the Node.js permission system matches those up with the permissions granted. If there's a mismatch, an error is thrown, the module is not loaded, or some other action is taken.
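As a rough illustration of this first interpretation, the sketch below matches what modules declare against what the user granted. The "permissions" field is purely hypothetical (package.json has no such property today), as are the module names and the findMismatches helper. The manifests are inlined rather than read from disk to keep the example self-contained.

```javascript
'use strict';

// True when `name` equals `scope` or sits below it in the hierarchy.
const covers = (scope, name) =>
  name === scope || name.startsWith(`${scope}.`);

// Hypothetical manifests: the "permissions" field is an assumption, not an
// existing package.json property.
const manifests = [
  { name: 'http-client', permissions: ['net.tcp.out', 'net.dns'] },
  { name: 'config-loader', permissions: ['fs.in'] },
  { name: 'telemetry', permissions: ['net.udp', 'user'] },
];

// Returns, per module, the declared permissions the user has not granted.
function findMismatches(modules, granted) {
  const mismatches = new Map();
  for (const { name, permissions = [] } of modules) {
    const missing = permissions.filter(
      (needed) => !granted.some((scope) => covers(scope, needed)));
    if (missing.length > 0) mismatches.set(name, missing);
  }
  return mismatches;
}

// The user granted all of fs, all of TCP, and DNS, but nothing else.
const mismatches = findMismatches(manifests, ['fs', 'net.tcp', 'net.dns']);
for (const [name, missing] of mismatches) {
  console.log(`${name} requires ungranted permissions: ${missing.join(', ')}`);
}
```

Here only the telemetry module is flagged, for net.udp and user. Whether a mismatch should throw, skip loading the module or merely warn is exactly the kind of policy decision that remains open.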

For others, per module permissions imply that each module has its own active set of permissions, theoretically allowing a trusted application to load and execute a potentially untrusted module with a different set of permissions. Accomplishing this in Node.js would be non-trivial at best and impossible at worst. The key complicating factor is that Node.js loads all modules (running within a single thread) into the same global context. Modules can share code, pass references around, exchange callbacks, etc. Requiring that every module have its own active permissions would require us to reimplement a significant chunk of Node.js and definitely does not fit into the backwards compatibility goal we stated upfront without incurring a significantly higher complexity cost. We could, in theory, implement another type of module loading within Node.js, or give each module its own possibly restricted copies of the Node.js core APIs, or work with TC39 to define and implement new language-level constructs where modules can be loaded into isolated contexts. But doing so would be complicated, take a long time and honestly be of questionable value given the difficulty involved!

Whatever your definition of per module permissions happens to be, they add a level of complexity to the entire discussion that we are not (yet) convinced is justified. Per-process and per-worker thread permissions are by far easier to implement, easier to reason about and likely cover the overwhelming majority of use cases. Especially if we consider that there will be environments (such as containers) where use of the permission system simply won't make sense, the additional complexity just may not be worth it.

The complexity of asynchronous context

Closely related to this issue of permission scope is the question of whether permissions should be mutable within a process, and what the impact of such changes should be.

Take the following case as an example: Here we run an application that creates two parallel asynchronous contexts. When both contexts are created, the net permission is granted. In one, however, the net permission is immediately denied. Should that change impact the entire process, or should it only impact the asynchronous context in which it was denied? Specifically, what should the two console.log statements in the script output?

const { setTimeout } = require('timers/promises');

console.log(process.policy.check('net')); // true

process.nextTick(() => {

process.policy.deny('net');

setTimeout(1000).then(() => {

/* 1 */ console.log(process.policy.check('net'));

});

});

process.nextTick(() => {

setTimeout(1000).then(() => {

/* 2 */ console.log(process.policy.check('net'));

});

});

The answer has major ramifications for the complexity of the implementation inside Node.js. If the answer is that the change should affect the entire process (which is the way deno handles it), then the implementation is much easier, but code that starts to execute when the permission is granted may fail later when that permission is revoked. (For instance, imagine an async generator function that is created when the permission is allowed that suddenly errors when attempting to yield on the next internal iteration.) It's a perfectly acceptable answer to say that's fine, permission changes should be process-scoped, and leave it at that. But is that what we want?

If the answer is that the change should be limited only to the async context in which the change was made, suddenly the implementation becomes much more complicated because we have to modify Node.js's internal asynchronous context tracing mechanisms to retain the policy that was in scope when the context was created, ensure that the retained policy is used when callbacks or microtasks are invoked, then revert to the prior policy when those callbacks complete. It becomes a careful and tedious dance that requires changes everywhere in Node.js where native code invokes JavaScript code or where the asynchronous execution context changes. We'd even have to make sure that native C++ calls to the V8 APIs that get and set properties are protected, since those can run arbitrary user code in the form of getters and setters or proxies. We can't have a case where all the code except getters running in an async context is denied net permissions!

(There are also quite a few issues around maintaining performance and usability with this approach that are way too much to get into here!)

There are advantages to having permissions flow with the async context, however, that make it an attractive option. We could, for instance, vary permissions in a server by request (e.g. an authenticated user may have read permissions in the file system while an anonymous user does not). Is that kind of benefit worth the additional complexity? At this point we're definitely not yet convinced, but it's still a worthwhile mental exercise in this discussion.
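The difference between the two answers can be approximated in user land today with AsyncLocalStorage from async_hooks. This is only a sketch of the semantics, not of how Node.js would implement it internally: createPolicy, denyForProcess and denyForContext are hypothetical stand-ins for the process.policy object used above, and enterWith() is itself documented as experimental.

```javascript
'use strict';

const { AsyncLocalStorage } = require('async_hooks');
const { setTimeout: sleep } = require('timers/promises');

// Hypothetical stand-in for process.policy, supporting both scoping models.
function createPolicy() {
  const storage = new AsyncLocalStorage();
  const processWide = new Set(); // denials visible to every context
  return {
    // Process-scoped: the denial applies everywhere from now on.
    denyForProcess(name) { processWide.add(name); },
    // Context-scoped: the denial applies to the current async context and
    // to the asynchronous work started from it.
    denyForContext(name) {
      const inherited = storage.getStore() ?? [];
      storage.enterWith(new Set([...inherited, name]));
    },
    check(name) {
      const local = storage.getStore();
      return !processWide.has(name) && !(local && local.has(name));
    },
  };
}

// Replays the two-context scenario; `scope` is 'context' or 'process'.
async function demo(scope) {
  const policy = createPolicy();
  const deny =
    scope === 'context' ? policy.denyForContext : policy.denyForProcess;
  const results = {};
  await Promise.all([
    new Promise((resolve) => process.nextTick(() => {
      deny('net');
      sleep(10).then(() => { results.first = policy.check('net'); resolve(); });
    })),
    new Promise((resolve) => process.nextTick(() => {
      sleep(10).then(() => { results.second = policy.check('net'); resolve(); });
    })),
  ]);
  return results;
}

(async () => {
  console.log('context-scoped:', await demo('context'));
  console.log('process-scoped:', await demo('process'));
})();
```

Under the context-scoped model the first check reports false while the second still reports true; under the process-scoped model both report false. The sketch sidesteps the hard part described above, which is making every internal transition from native code into JavaScript honour the retained policy.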

The public vs private API problem

Another critical issue with this whole discussion is the fact that, in Node.js, most of the built-in modules (fs, http, net, crypto, etc.) make use of the same APIs as user code. Loading a module with require(), for instance, makes use of the same file system operations as user code calling fs.readFile(). We can't have a situation where denying file system access to the Node.js process causes Node.js itself to stop working. Node.js's own core library must be capable of running in a privileged access mode that is not available to user code, but it would be ideal not to have to rewrite most of the core JavaScript to accomplish that.

Fortunately, such a privileged mode is easy enough to implement, but it still requires extensive changes throughout the codebase. Every time Node.js internal code is running, the privileged access would apply; every time user code is running, the set of active permissions would apply. This would mean gating every callback with some additional code to ensure that the appropriate security context has been applied. That can be difficult to do! It would be much easier if the Node.js internals followed a different code path than user code, but to accomplish that would take a significant effort! Also, we need answers to all of the rest of the questions raised here in order to implement it correctly. For instance, the question about whether permission changes follow the async execution context would have a major impact on the internal implementation of asynchronous callback and Promise API implementations (it would also have a major impact on performance but that's a whole different matter!).
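A very small sketch of that gating idea follows. It assumes a single synchronous call stack and ignores the async questions above. The names runPrivileged, runAsUser, assertPermission and the ERR_PERMISSION_DENIED code are all hypothetical, and the wrapped statSync merely stands in for a public core API.

```javascript
'use strict';

const fs = require('fs');

// Hypothetical policy state: file system reads are denied to user code.
const denied = new Set(['fs.in']);

// A depth counter rather than a boolean, so nested privileged calls unwind
// correctly.
let privilegedDepth = 0;

// Runs `fn` as if it were Node.js internal code.
function runPrivileged(fn) {
  privilegedDepth++;
  try {
    return fn();
  } finally {
    privilegedDepth--;
  }
}

// Runs a user-supplied callback with the active permissions re-applied,
// even when it is invoked from inside privileged code.
function runAsUser(fn) {
  const saved = privilegedDepth;
  privilegedDepth = 0;
  try {
    return fn();
  } finally {
    privilegedDepth = saved;
  }
}

function assertPermission(name) {
  if (privilegedDepth === 0 && denied.has(name)) {
    const err = new Error(`Permission denied: ${name}`);
    err.code = 'ERR_PERMISSION_DENIED'; // hypothetical error code
    throw err;
  }
}

// Gated wrapper standing in for the public fs.statSync.
function statSync(path) {
  assertPermission('fs.in');
  return fs.statSync(path);
}

// Internal code (think: the module loader) can still touch the file system...
const stats = runPrivileged(() => statSync(process.execPath));
console.log(`internal code: stat ok (${stats.size} bytes)`);

// ...while the same call from user code is rejected...
try {
  statSync(process.execPath);
} catch (err) {
  console.log(`user code: ${err.message}`);
}

// ...and so is a user callback invoked from within internal code.
try {
  runPrivileged(() => runAsUser(() => statSync(process.execPath)));
} catch (err) {
  console.log(`user callback: ${err.message}`);
}
```

The last case is the one that makes the real work tedious: every place where internal code calls back into user code needs the equivalent of runAsUser.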

Where do we go from here?

Right now, our intent in investigating these issues is to start a dialogue to first determine whether adding a permission system in Node.js is both desirable and feasible, and second to determine what such a system may look like. The issues discussed here are only a small handful of the issues that need to be considered. If Node.js were starting from scratch, with a totally green field and the luxury of a decade of hindsight, this discussion and the decisions would be much easier. But Node.js has an ecosystem that touches hundreds of millions of people globally and is used pretty much everywhere — we cannot break the planet, so we have to be careful how we proceed.

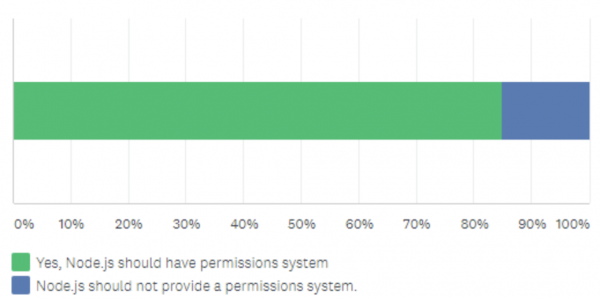

As one would likely expect, Node.js users have opinions about a great many topics — this is no exception. I recently ran a very unscientific but still meaningful poll on Twitter about how folks would feel about adding a permission system to Node.js. The response was overwhelmingly consistent in terms of wanting permissions but extremely divergent on the details:

When asked in a fairly unscientific survey, a not-quite-statistically-significant-but-still-interesting number of respondents were overwhelmingly in favour of adding permissions to Node.js.

I'd love to hear your thoughts and have you join the conversation! Exploration on the permission system is being done currently in this pull request in the Node.js repository. If this is an area of interest and you have some thoughts on how permissions can be implemented in Node.js, I invite you to share and help in the effort!
