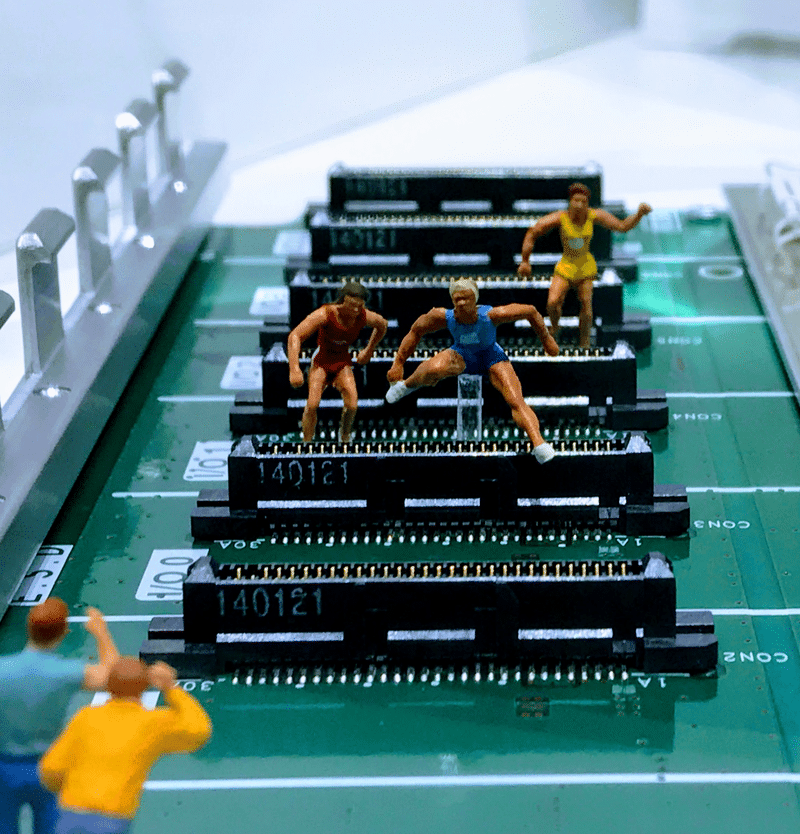

Avoiding Common Hurdles in Unit Testing

Increase unit testing ROI with these tips

Why do developers skip writing tests for some code? Perhaps because it relies on the file system or an external API. Perhaps because we think it’s too difficult, or because a test account for an external API is not guaranteed to be available. Perhaps an external API is not accessible from the CI environment because of VPN restrictions. Or perhaps mocking in your test suite has become so complicated that maintaining the mocks no longer repays the time invested. This usually happens when the mocks are too tightly coupled to the code being tested.

Sometimes testing every branch seems like an insurmountable obstacle. Knowing how to easily test error conditions makes increasing branch coverage a lot easier.

Better Mocking

Unit tests isolate the code being tested from other systems. This lets you focus on your code and not the interactions with other systems. One way to isolate the unit being tested from other systems is to use mocking.

Mocking can be terrible! At least, it can be when it is too tightly coupled to the code being tested. Keeping all the mocks up to date then becomes a huge task. For instance, tests that check that a mocked function f was called n times are too tightly coupled: they break whenever the implementation changes, even if its behaviour does not.
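A small sketch of the alternative to call-count assertions. The names `loadDisplayName` and `createFakeUserStore` are hypothetical. The fake behaves like the system it replaces (it stores and returns users), so the test checks only the observable result and keeps passing if the implementation later adds caching or batching.

```javascript
// Hypothetical code under test: it only needs a store with an async get(id)
const loadDisplayName = async (store, id) => {
  const user = await store.get(id)
  if (!user) return 'Unknown user'
  return `${user.firstName} ${user.lastName}`
}

// A fake that mimics the *system* (a user store), not the code under test
const createFakeUserStore = (users) => {
  const data = new Map(users.map((user) => [user.id, user]))
  return {
    get: async (id) => data.get(id)
  }
}

// Behavioural check: compare results, never how often store.get() was called
const checkDisplayNames = async () => {
  const store = createFakeUserStore([{ id: 1, firstName: 'Ada', lastName: 'Lovelace' }])
  return {
    known: await loadDisplayName(store, 1),
    unknown: await loadDisplayName(store, 2)
  }
}
```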

Good mocks mimic systems rather than mimicking the behaviour of the code under test. Mock servers that can be accessed over localhost are often the easiest to use. In this scenario, the code under test can remain unchanged, but configuration can be updated to point clients to the mock server running on localhost.
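A minimal sketch of a localhost mock server, using only Node's built-in `http` module. `createMockApi`, `getStatus` and the `/status` route are hypothetical. The client receives its base URL as a parameter, so in an application it would come from configuration. A test can then point it at the mock without touching the code under test.

```javascript
const http = require('node:http')

// Code under test: the base URL is supplied by configuration, not hard-coded
const getStatus = (baseUrl) => new Promise((resolve, reject) => {
  http.get(`${baseUrl}/status`, (res) => {
    let body = ''
    res.setEncoding('utf8')
    res.on('data', (chunk) => { body += chunk })
    res.on('end', () => {
      try {
        resolve(JSON.parse(body))
      } catch (error) {
        reject(error)
      }
    })
  }).on('error', reject)
})

// A tiny mock that mimics the external system's /status endpoint
const createMockApi = () => http.createServer((req, res) => {
  if (req.method === 'GET' && req.url === '/status') {
    res.writeHead(200, { 'content-type': 'application/json' })
    res.end(JSON.stringify({ ok: true }))
    return
  }
  res.writeHead(404)
  res.end()
})

// Start the mock on a free port, run the client against it, then shut it down
const runAgainstMock = async () => {
  const server = createMockApi()
  await new Promise((resolve) => server.listen(0, '127.0.0.1', resolve))
  const { port } = server.address()
  try {
    return await getStatus(`http://127.0.0.1:${port}`)
  } finally {
    await new Promise((resolve) => server.close(resolve))
  }
}
```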

Sometimes mocks are readily available as NPM modules. For example, s3rver is a mock server for Amazon’s S3 service.

```javascript
const Aws = require('aws-sdk')
const S3rver = require('s3rver')
const Util = require('util')

// Start s3rver on localhost and resolve with the running instance
const createMockS3Server = () => {
  return new Promise((resolve, reject) => {
    const mock = new S3rver({
      port: 4569,
      hostname: 'localhost',
      silent: true,
      directory: '/tmp/s3rver'
    }).run((error) => {
      if (error) {
        reject(error)
        return
      }
      resolve(mock)
    })
  })
}

// Point the AWS SDK at the mock server; s3rver serves plain HTTP
Aws.config.s3 = {
  s3ForcePathStyle: true,
  endpoint: 'http://localhost:4569'
}

// Create the client after setting Aws.config.s3 so it picks up the mock endpoint
const s3Client = new Aws.S3()
const namespace = 'test-bucket' // illustrative bucket name

test('starts s3rver', async () => {
  const mockS3Server = await createMockS3Server()
  try {
    await s3Client.createBucket({ Bucket: namespace }).promise()
    // Placeholder for the code under test
    await testCodeThatUploadsAFileToTheBucket()
  } finally {
    // close() relies on `this`, so bind it before promisifying
    await Util.promisify(mockS3Server.close.bind(mockS3Server))()
  }
})
```

Unfortunately, in many situations the service your code depends on may not have a mocked version yet. The yakbak module can be a useful tool in this situation. It records HTTP responses so they can be replayed later without making additional network requests.

This lets you create basic mock servers for your unit tests. For almost all projects, you can assume that the Node.js HTTP module and network interface are well tested and there is no additional benefit to testing them again.

Another benefit of using yakbak is performance. Test suites that run in less time can be run more often. They can even be run when no network interface is present, meaning developers can continue working even if external APIs are unreachable for any reason.

```javascript
const Http = require('http')
const Path = require('path')
const Util = require('util')
const Yakbak = require('yakbak')

// Only record new tapes outside CI; in CI, requests with no tape fail
const allowMockServerRecordings = !process.env.CI

const createMockNpmApi = () => {
  return Http.createServer(
    Yakbak('https://api.npmjs.org', {
      dirname: Path.join(__dirname, 'npm-api-tapes'),
      noRecord: !allowMockServerRecordings
    })
  ).listen(7357)
}

test('Can extract module info', async () => {
  const mockNpmApi = createMockNpmApi()
  try {
    // The mock server speaks plain HTTP on localhost
    await doSomethingWithNpm('http://localhost:7357')
  } finally {
    // server.close() takes a callback, so promisify it to await shutdown
    await Util.promisify(mockNpmApi.close.bind(mockNpmApi))()
  }
})
```

Every request is first checked against the locally cached responses (tapes) in the test/npm-api-tapes directory. These are generated files, so you may want to add this directory to your .eslintignore file if you use ESLint.

Requests that are not cached are made to the live server, and new tapes are recorded. Because a new tape is added for each new request, you can keep track of which calls are being made. Check each new tape for validity: if the request is expected and valid, commit the tape to the repository. If you notice a tape for a request you didn’t expect, delete it and fix the bug.

The CI environment variable indicates whether code is running in a continuous integration (CI) environment such as CircleCI. When the variable is set, no new tapes are created; instead, requests that have no tape fail. This prevents unexpected requests from going unnoticed (false positives) during CI testing. A missing tape in CI usually indicates a bug or a scenario that was not considered when the tests were written.
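To illustrate the record/replay rules described above, here is a deliberately simplified sketch. It is not yakbak's implementation. Tapes live in an in-memory `Map` keyed by method and URL, and `createTapeDeck` and `fetchLive` are hypothetical stand-ins for the real mock server and network call. Outside CI an unknown request is recorded; when `CI` is set, it fails.

```javascript
// Simplified record/replay cache (illustration only, not yakbak's internals)
const createTapeDeck = ({ fetchLive, tapes = new Map(), env = process.env }) => {
  // Same rule as in the article: never record new tapes in CI
  const allowRecording = !env.CI

  const play = async (method, url) => {
    const key = `${method} ${url}`
    if (tapes.has(key)) {
      return tapes.get(key) // replay a previously recorded response
    }
    if (!allowRecording) {
      throw new Error(`No tape for ${key} and recording is disabled in CI`)
    }
    const response = await fetchLive(method, url) // hit the live service once
    tapes.set(key, response) // new tape: review it before committing it
    return response
  }

  return { play, tapes }
}
```

Passing `env` in explicitly keeps the sketch testable. yakbak itself exposes the same choice through its `noRecord` option, as in the createMockNpmApi example above.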

Creating a mock server with yakbak can be the basis for a more abstract mock server later. An example of this is mock-okta. It consists of a number of yakbak tapes, plus one endpoint mocked with custom code.

It would be a security risk if Okta allowed private keys to be retrieved by a public API endpoint. But for local tests we may need access to the private key used to sign JWTs. (JWTs, or JSON Web Tokens, are an open method for creating access tokens that are usually used to authenticate web endpoints.) mock-okta adds some additional code on top of the yakbak tapes to enable working with private keys local to the tests, so that JWTs can be created locally (and definitely not remotely).
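As a rough sketch of what creating JWTs locally involves, the following uses only Node's `crypto` module to generate a throwaway RSA key pair and sign an RS256 token. This is not mock-okta's API. `createTestJwt`, `verifyTestJwt` and the claim values are hypothetical. The private key never leaves the test process, and the matching public key is what a mock endpoint could serve for verification.

```javascript
const crypto = require('node:crypto')

// Throwaway key pair for tests only; never use it outside the test run
const { privateKey, publicKey } = crypto.generateKeyPairSync('rsa', { modulusLength: 2048 })

const base64url = (value) => Buffer.from(value).toString('base64url')

// header.payload.signature (RFC 7519 compact form), signed with RS256
const createTestJwt = (claims, key = privateKey) => {
  const header = base64url(JSON.stringify({ alg: 'RS256', typ: 'JWT' }))
  const payload = base64url(JSON.stringify(claims))
  const signature = crypto
    .createSign('RSA-SHA256')
    .update(`${header}.${payload}`)
    .sign(key, 'base64url')
  return `${header}.${payload}.${signature}`
}

// Check the signature with the public key, as the code under test would
const verifyTestJwt = (token, key = publicKey) => {
  const [header, payload, signature] = token.split('.')
  return crypto
    .createVerify('RSA-SHA256')
    .update(`${header}.${payload}`)
    .verify(key, signature, 'base64url')
}
```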

Mocking Modules

When a ready-made mock for a dependency is not available, when the dependency does not communicate over HTTP, or when the dependency is not a server at all, another approach is to use proxyquire. This module mocks dependencies at the require level in tests. When the code under test requires the external dependency, it receives the mocked version instead:

```javascript
const Faker = require('faker')
const NoGres = require('@nearform/no-gres')
const Proxyquire = require('proxyquire').noPreserveCache().noCallThru()

test('counting users works', async () => {
  const mockPgClient = new NoGres.Client()
  // Expect this query with no parameters, and return one fake user row
  mockPgClient.expect('SELECT * FROM users', [],
    [{ email: Faker.internet.exampleEmail(), name: Faker.name.firstName() }])

  // Load ../user with `pg` replaced by the mock client
  const User = Proxyquire('../user', {
    pg: {
      // `Client` can't be an arrow function because it is called with `new`
      Client: function () {
        return mockPgClient
      }
    }
  })

  expect(await User.count()).to.equal(1)
  // Check that all expected queries were executed
  mockPgClient.done()
})
```

To isolate state to the test function, noPreserveCache() is called, so the module is loaded afresh each time proxyquire is used. Note that, with noPreserveCache(), any side effects of loading the module occur in every test.
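To see what loading a module afresh means, here is a small sketch using Node's `require.cache`, the module cache that `noPreserveCache()` works around. It is an illustration of the mechanism, not how proxyquire is implemented. The sketch writes a throwaway module with a visible side effect to a temporary directory; the file name and the `globalThis.loadCount` counter are hypothetical. The side effect runs again whenever the cached entry is removed.

```javascript
const fs = require('node:fs')
const os = require('node:os')
const path = require('node:path')

// Write a throwaway module whose top-level code has a side effect
const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'fresh-require-'))
const modulePath = path.join(dir, 'counter.js')
fs.writeFileSync(
  modulePath,
  'globalThis.loadCount = (globalThis.loadCount || 0) + 1\nmodule.exports = globalThis.loadCount\n'
)

// Cached require: the module body runs only the first time
const loadCached = () => require(modulePath)

// Fresh require: drop the cache entry so the body (and its side effects) run again
const loadFresh = () => {
  delete require.cache[require.resolve(modulePath)]
  return require(modulePath)
}
```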

In this example, noCallThru() is also used. This matters when an unexpected call could be destructive, for example if an SQL DROP TABLE statement were accidentally executed against your local Postgres database. With noCallThru(), the real module is never loaded, so calling any function that has not been mocked causes an error instead of reaching the real dependency.
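proxyquire handles this for you, but the underlying idea can be sketched independently with a JavaScript `Proxy`: fail loudly on anything that was not explicitly mocked. This is a swapped-in technique, not proxyquire's mechanism, and `createStrictStub`, `query` and `dropTable` are hypothetical names. The stub returns the members you define and throws for anything else, so an unexpected call never reaches a real database.

```javascript
// Wrap the mocked members in a Proxy that rejects any other property access
const createStrictStub = (name, members) => new Proxy(members, {
  get (target, property) {
    if (property in target) return target[property]
    // Let promise detection and inspection probes through (e.g. `then`, symbols)
    if (typeof property === 'symbol' || property === 'then') return undefined
    throw new Error(`${name}.${String(property)} has not been mocked`)
  }
})

// Only `query` is mocked; anything else fails instead of touching real data
const db = createStrictStub('db', {
  query: async (sql) => (sql === 'SELECT count(*) FROM users' ? [{ count: 1 }] : [])
})
```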

The faker module is also used in this example. It has a great suite of functions for generating dummy data, which makes tests more robust. For example, if your tests always use a fake email like alice@example.com, and there is a bug that only appears when the email contains an underscore, that bug might not be found before the next release. With faker, more data shapes are tested, which increases the likelihood of finding the bug before the next version of the software is released.
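faker does this with a large catalogue of generators. To show the principle without depending on it, here is a tiny seeded generator. `mulberry32` is a well-known small pseudo-random number generator, and `randomEmail`, `generateEmails` and the character set are hypothetical. Seeding keeps failures reproducible: if the seed is logged, a failing data shape can be replayed exactly.

```javascript
// mulberry32: small, fast, seedable PRNG returning floats in [0, 1)
const mulberry32 = (seed) => {
  let state = seed >>> 0
  return () => {
    state = (state + 0x6D2B79F5) | 0
    let t = state
    t = Math.imul(t ^ (t >>> 15), t | 1)
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61)
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296
  }
}

// Deliberately include '.', '_' and '+', which fixed fixtures often miss
const LOCAL_CHARS = 'abcxyz0189._+'
const DOMAINS = ['example.com', 'example.net', 'example.org']

const randomEmail = (random) => {
  const pick = (items) => items[Math.floor(random() * items.length)]
  const length = 1 + Math.floor(random() * 12) // 1 to 12 characters
  let local = ''
  for (let i = 0; i < length; i += 1) local += pick(LOCAL_CHARS)
  return `${local}@${pick(DOMAINS)}`
}

// Same seed, same list of emails
const generateEmails = (seed, count) => {
  const random = mulberry32(seed)
  return Array.from({ length: count }, () => randomEmail(random))
}
```

Some generated local parts (for example, ones starting with a dot) are not valid addresses. That can be a useful edge case in itself; restrict the character set if your code should only ever see valid input.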

File System

Code that uses the file system may seem difficult to test at first, but a readily available NPM module called mock-fs makes it easy.

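```javascript
const Fs = require('fs')
const MockFs = require('mock-fs')

test('It loads the file', async () => {
  try {
    // Replace the real file system with an in-memory one for this test
    MockFs({
      'path/to/fake/dir/some-file.txt': 'file content here'
    })
    // readFileSync (readSync works on file descriptors) with an encoding returns a string
    const content = Fs.readFileSync('path/to/fake/dir/some-file.txt', 'utf8')
    expect(content).to.equal('file content here')
  } finally {
    // Always restore the real file system, even if the assertion fails
    MockFs.restore()
  }
})
```

Dates and Timers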

There’s also a module that helps with testing code that relies on timers and other date-related features of JavaScript. It is called lolex.

```javascript
const Lolex = require('lolex')
const Sprocket = require('../sprocket')

// Sprocket.longRunningFunction may look something like:
// const longRunningFunction = async () => {
//   await new Promise((resolve) => setTimeout(resolve, 900000))
//   return true
// }

test('a long running function executes', async () => {
  // Replace the global timers and Date with a fake clock starting at 0
  const clock = Lolex.install()
  try {
    // Date.now() returns 0
    const p = Sprocket.longRunningFunction()
    // Advance the fake clock by 15 minutes, firing the pending setTimeout
    clock.tick(900000)
    // Date.now() returns 900000
    const status = await p
    expect(status).to.equal(true)
  } finally {
    clock.uninstall()
  }
})
```

In this example, longRunningFunction waits 15 minutes (900,000 ms) before returning its value. Obviously, waiting 15 minutes every time a test calls longRunningFunction is far from ideal! By mocking the global setTimeout function, we avoid waiting at all and instead advance the clock 15 minutes to check that the expected value is returned.
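lolex does far more (it also patches Date and interval timers, for example), but the core idea fits in a few lines. A virtual clock keeps its own `now`, queues timers instead of scheduling them, and runs whichever timers fall due when the test calls `tick`. In this sketch the clock is passed in explicitly rather than replacing the global `setTimeout`; `createFakeClock` and `waitThenReturn` are hypothetical names.

```javascript
// A minimal virtual clock: timers only fire when tick() advances time
const createFakeClock = () => {
  let now = 0
  let nextId = 1
  const timers = []

  return {
    now: () => now,
    setTimeout (callback, delay = 0) {
      const id = nextId++
      timers.push({ id, at: now + delay, callback })
      return id
    },
    tick (ms) {
      const target = now + ms
      // Fire due timers in scheduled order (ties broken by creation order)
      for (;;) {
        const due = timers
          .filter((timer) => timer.at <= target)
          .sort((a, b) => a.at - b.at || a.id - b.id)[0]
        if (!due) break
        timers.splice(timers.indexOf(due), 1)
        now = due.at
        due.callback()
      }
      now = target
    }
  }
}

// Code under test receives its clock, so a test can substitute the fake one
const waitThenReturn = (clock, delay) =>
  new Promise((resolve) => clock.setTimeout(() => resolve(true), delay))
```

Passing the clock in avoids patching globals, at the cost of threading a dependency through the code. `Lolex.install()` makes the opposite trade-off.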

Code Coverage

Unlike unit tests, code coverage does not show that code behaves as expected. What it shows is that the code being tested runs, does not time out and does not cause unexpected errors or, in the event of an error, that the error is caught and handled as expected.

While opinions differ on what level of code coverage is desirable, and on whether there are diminishing returns when moving from, say, 90% to 100% coverage, many developers agree that code coverage tests are beneficial.

Just loading your code typically gets you 60-70% code coverage, so we are really only talking about coverage for the last 30-40%. Smart fuzzing can push that starting point a little further towards 100%, with little additional effort on your part.

Smart Fuzzing

According to MongoDB, smart fuzzing finds many more bugs before they reach production than their unit tests do (see here). While MongoDB uses an advanced smart fuzzing technique that mutates unit test code, we do not have to go that far to benefit from smart fuzzing. By using hapi’s built-in validation to define what requests and responses look like, we not only get the benefit of validation but can also use this information to run smart fuzzing tests.

```javascript
// hapi v17-style API; newer hapi releases are published as @hapi/hapi
const Hapi = require('hapi')
const Joi = require('joi')

const createServer = async () => {
  const server = Hapi.server({ port: 7357 })

  server.route({
    method: 'GET',
    path: '/{name}/{size}',
    handler: (request) => request.params,
    options: {
      response: {
        // Validate 10% of responses against the schema
        sample: 10,
        schema: Joi.object().keys({
          name: Joi.string().min(1).max(10),
          size: Joi.number()
        })
      },
      validate: {
        params: {
          name: Joi.string().min(1).max(10),
          size: Joi.number()
        }
      }
    }
  })

  await server.start()
  // A template literal needs backticks to interpolate
  console.log(`Server running at: ${server.info.uri}`)
  return server
}

test('endpoints do not time out and any errors are caught', async () => {
  const testServer = await createServer()
  try {
    // SmartFuzz is the author's own tool and is not shown here
    await SmartFuzz(testServer)
  } finally {
    await testServer.stop()
  }
})
```

In the same amount of time that I spent trying to set up swagger-test or got-swag for use within test files with authenticated requests, I had written the first iteration of SmartFuzz. These are probably both decent command line tools, but they cannot easily be dropped into test files. Whenever possible, it is important to have tools that can be used from within Node.js test code, as this makes creating your test environment a lot easier.
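SmartFuzz itself is not shown in this post. As a rough, hypothetical illustration of the idea, the sketch below derives test inputs from a simplified description of the route parameters above. The `{ type, min, max }` objects stand in for the Joi schema; reading a real Joi schema is left out. `candidatesFor` and `buildFuzzParams` are hypothetical names. Values just inside and just outside each rule are the cases most likely to expose missing validation or unhandled errors.

```javascript
// Simplified parameter rules mirroring the route above (stand-ins for the Joi schema)
const paramRules = {
  name: { type: 'string', min: 1, max: 10 },
  size: { type: 'number' }
}

// Candidate values for one rule: valid boundaries plus likely-invalid inputs
const candidatesFor = (rule) => {
  if (rule.type === 'string') {
    return [
      'a'.repeat(rule.min), // shortest valid
      'a'.repeat(rule.max), // longest valid
      'a'.repeat(rule.max + 1), // one too long
      '%20', // encoded whitespace
      '../..' // path-like input
    ]
  }
  if (rule.type === 'number') {
    return ['0', '-1', '1.5', '1e309', 'NaN', 'abc']
  }
  return ['']
}

// Every combination of candidates across all parameters (cartesian product)
const buildFuzzParams = (rules) =>
  Object.entries(rules).reduce(
    (combos, [key, rule]) =>
      combos.flatMap((combo) => candidatesFor(rule).map((value) => ({ ...combo, [key]: value }))),
    [{}]
  )
```

Each combination could then be URL-encoded into a path, sent with hapi's `server.inject()`, and checked for a response that is neither a 500 nor a timeout.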

Testing Error Handling

Also related to code coverage, testing branches is often the “last mile” of coverage testing. Many of these branches handle errors, which adds to the difficulty. Testing the code under error conditions is how you reach those extra branches.

Before we test error conditions, a quick note. Some people are confused when error messages are output during testing, but it is important to test error conditions even if they produce output. One solution is to stub out console.log and console.error to hide the messages, but I prefer to see the output, to help debug any problems that are found. So, let’s add a helpful message for people who may be alarmed:

```javascript
console.warn('Note: error message output is expected as part of normal test operation.')
```

Most assertion libraries include code to test for expected promise rejections. For whatever reason, I have not been successful in making these work. In practice, I have used this function:

```javascript
// Resolves if `p` rejects with exactly `message`; throws otherwise
const expectRejection = async (p, message) => {
  try {
    await p
  } catch (error) {
    if (error.message === message) return
    // Template literals (backticks) are needed for interpolation
    throw new Error(`Expected error "${message}" but found "${error.message}"`)
  }
  throw new Error(`Expected error "${message}" but no error occurred.`)
}
```

Using this function makes testing error conditions easy. And when it is easy, there is no excuse not to test many more branches in the code. That increases code coverage considerably, and it tests the code at the time it is most vulnerable: when an error has occurred.

```javascript
test('invalid input causes an error', async () => {
  await expectRejection(doSomeTest({ input: 'invalid' }), 'Validation failed.')
})
```

Conclusion

Ready to increase test ROI? Put these tips into practice today. Don’t let external systems, complicated mocks, branch testing, or low code coverage stand in the way!

Need help developing your application? Get in touch with us today!
