Part 2 of a 6-Part Series on Deploying on Google Cloud Platform

We recently carried out five mock deployments on Google Cloud Platform, each using a different method, to understand the nuances of each one.

The five methods we investigated were:

- Google Compute Engine

- Google Kubernetes Engine

- App Engine

- Cloud Run

- Cloud Functions

In this series we examine each of the five approaches in turn, then wrap it all up with an article that discusses our findings across all five.

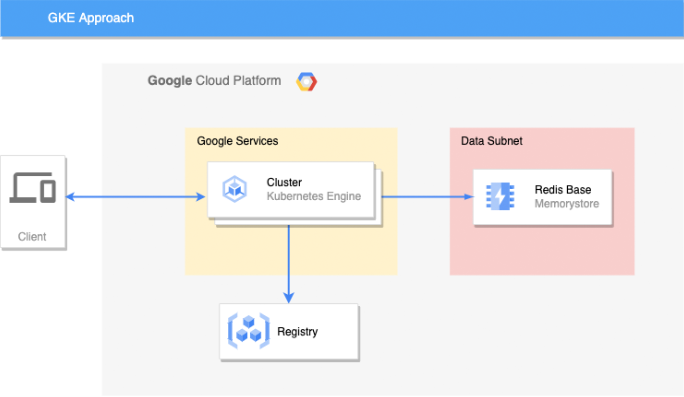

In this second article in the series, we deploy an application on Google Cloud Platform using Google Kubernetes Engine.

Deploying with Google Kubernetes Engine

Google Kubernetes Engine (GKE) is the CaaS (Container as a Service) solution provided by Google Cloud Platform.

It allows users to easily run Docker containers in a fully managed Kubernetes environment.

It automates the deployment and management of containers, along with their networking, storage and compute resources.

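Google Kubernetes Engine leverages Kubernetes features that help users manage the many moving parts of large applications. One example is the Horizontal Pod Autoscaler (HPA), which adjusts the number of replicas of a workload based on observed metrics such as CPU utilization; the Kubernetes scheduler then places those replicas on the nodes available in the cluster.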
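As an illustration, an HPA can be declared with the Terraform Kubernetes provider, which this project already uses for its other Kubernetes resources. This is a minimal sketch, not code from our repository: it assumes a Deployment with the hypothetical name `my-app` whose containers declare CPU requests (CPU utilization is measured relative to those requests), and the replica bounds and 70% target are illustrative values.

```hcl
# Sketch: scale a hypothetical Deployment "my-app" on average CPU utilization.
resource "kubernetes_horizontal_pod_autoscaler" "app" {
  metadata {
    name = "my-app" # hypothetical name
  }

  spec {
    # Illustrative bounds: never fewer than 2 or more than 10 replicas.
    min_replicas = 2
    max_replicas = 10

    # The workload whose replica count the HPA controls.
    scale_target_ref {
      api_version = "apps/v1"
      kind        = "Deployment"
      name        = "my-app" # hypothetical Deployment name
    }

    # Add or remove replicas to keep average CPU near 70% of the requested CPU.
    target_cpu_utilization_percentage = 70
  }
}
```

The HPA only decides how many replicas should exist; where they run is still up to the scheduler.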

It also benefits from Kubernetes Services, which give a group of pods a stable internal address and load-balance traffic across them, to handle networking inside the cluster.
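For example, a ClusterIP Service gives every pod carrying a given label a single internal address. Below is a sketch with the Terraform Kubernetes provider; the name `my-app`, the label and both ports are hypothetical.

```hcl
# Sketch: an internal-only Service in front of pods labelled app = "my-app".
resource "kubernetes_service" "app" {
  metadata {
    name = "my-app" # hypothetical name; also becomes the in-cluster DNS name
  }

  spec {
    # Traffic is load-balanced across all pods matching this label.
    selector = {
      app = "my-app"
    }

    port {
      port        = 80   # port that clients inside the cluster connect to
      target_port = 8080 # hypothetical port the container listens on
    }

    # ClusterIP: reachable only from inside the cluster.
    type = "ClusterIP"
  }
}
```

Other pods in the same namespace can then reach the application at `http://my-app` (or `my-app.<namespace>.svc.cluster.local` from other namespaces), regardless of which nodes the pods run on.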

More GKE features are described at https://cloud.google.com/kubernetes-engine.

When to use GKE

You should use GKE when you need to run Docker containers in production without worrying too much about networking, node allocation and scaling.

It is a good option for large applications made up of many services that need to communicate with each other.

It is also a sensible choice when the team doesn't have much sysadmin experience, since GKE can be configured to automate tasks such as upgrading and repairing nodes, and it takes care of internal networking.
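In Terraform, automatic node upgrades and repairs are switched on per node pool through the `management` block of `google_container_node_pool`. A sketch, assuming the `k8s_cluster` cluster declared later in this article; the pool name `managed-node-pool` is hypothetical and this block is not part of our repository.

```hcl
# Sketch: a node pool that GKE keeps upgraded and repairs automatically.
resource "google_container_node_pool" "managed_nodes" {
  name     = "managed-node-pool" # hypothetical name
  location = var.region
  cluster  = google_container_cluster.k8s_cluster.name

  # In a regional cluster this is the node count per zone.
  node_count = 1

  management {
    # Recreate nodes that fail GKE's health checks.
    auto_repair = true
    # Keep node versions in step with the cluster control plane.
    auto_upgrade = true
  }
}
```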

Its console makes it easy to manage the application lifecycle, provision new instances and even restart or update applications.

How we implemented it

For this solution we used a Terraform project that declares the following resources:

- A VPC with a subnet for running the instances

- An Artifact Registry repository for storing the application's container image

- A Redis instance to store data

- A Kubernetes deployment, service, secret and load balancer for running the application
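The Artifact Registry repository from this list could be sketched as follows, together with an IAM binding that lets the nodes' service account (`google_service_account.app`, used by the node pool below) pull images, and a local value holding the resulting image URL. This is an assumption-based sketch rather than our repository's code: the repository ID suffix `-repo`, the image name `app` and the `latest` tag are hypothetical.

```hcl
# Sketch: a Docker-format repository for the application image.
resource "google_artifact_registry_repository" "app" {
  location      = var.region
  repository_id = "${var.app_name}-repo" # hypothetical naming scheme
  format        = "DOCKER"
}

# Let the node service account pull images from this repository.
resource "google_artifact_registry_repository_iam_member" "nodes_can_pull" {
  project    = google_artifact_registry_repository.app.project
  location   = google_artifact_registry_repository.app.location
  repository = google_artifact_registry_repository.app.name
  role       = "roles/artifactregistry.reader"
  member     = "serviceAccount:${google_service_account.app.email}"
}

locals {
  # Docker images in Artifact Registry are addressed as
  # <location>-docker.pkg.dev/<project>/<repository>/<image>:<tag>.
  # The image name "app" and tag "latest" are hypothetical.
  app_image = "${var.region}-docker.pkg.dev/${google_artifact_registry_repository.app.project}/${google_artifact_registry_repository.app.repository_id}/app:latest"
}
```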

Main Components

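Redis

Here is the Terraform block declaring the Redis instance.

```hcl
resource "google_redis_instance" "data" {
  name   = "${var.app_name}-redis"
  region = var.region

  # BASIC tier: a single Redis node with no replica or automatic failover.
  tier           = "BASIC"
  memory_size_gb = var.regis_memory_size_gb

  # Reach the instance over private services access; this mode requires a
  # private services access connection on the VPC
  # (google_service_networking_connection).
  authorized_network = google_compute_network.vpc.id
  connect_mode       = "PRIVATE_SERVICE_ACCESS"
}
```

Cluster

Here we declare the cluster, setting the region, network and subnetwork.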

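```hcl
resource "google_container_cluster" "k8s_cluster" {
  name = "${var.app_name}-k8s-cluster"

  # A region (rather than a zone) makes this a regional cluster.
  location   = var.region
  network    = google_compute_network.vpc.id
  subnetwork = google_compute_subnetwork.app.id

  # VPC-native networking: pod IPs come from the secondary range named in
  # cluster_secondary_range_name, Service IPs from services_secondary_range_name.
  ip_allocation_policy {
    cluster_secondary_range_name  = "services-range"
    services_secondary_range_name = google_compute_subnetwork.app.secondary_ip_range[1].range_name
  }

  # Delete the default node pool; nodes come from the separately managed pool.
  remove_default_node_pool = true
  initial_node_count       = 1
}
```

Node Pool

Here we declare the node pool with custom configurations, mainly the preemptible option. Preemptible VMs are much cheaper than regular VMs, but Compute Engine can stop them at any time and they run for at most 24 hours.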

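```hcl
resource "google_container_node_pool" "k8s_cluster_preemptible_nodes" {
  name     = "${var.app_name}-node-pool"
  location = var.region
  cluster  = google_container_cluster.k8s_cluster.name

  # In a regional cluster this is the number of nodes per zone.
  node_count = 1

  node_config {
    # Cheaper nodes that Compute Engine may reclaim at any time.
    preemptible  = true
    machine_type = var.app_machine_type

    # Nodes run as this service account; with the cloud-platform scope,
    # its IAM roles decide which Google Cloud APIs it can call.
    service_account = google_service_account.app.email
    oauth_scopes = [
      "https://www.googleapis.com/auth/cloud-platform"
    ]
  }
}
```

Ingress Controller

Here we declare the Helm chart that installs the NGINX ingress controller in the cluster.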

```hcl
# ...

resource "helm_release" "nginx" {
  depends_on = [
    google_container_cluster.k8s_cluster
  ]

  name       = "ingress-nginx"
  namespace  = kubernetes_namespace.nginx.metadata[0].name
  repository = "https://kubernetes.github.io/ingress-nginx"
  chart      = "ingress-nginx"
  version    = "4.1.4"

  # Give the controller's LoadBalancer Service the reserved static IP.
  set {
    name  = "controller.service.loadBalancerIP"
    value = google_compute_address.app_static_ip.address
  }
}
```

Other components

The complete solution, including the other components, can be found in our repository: https://github.com/nearform/gcp-articles
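Among those other components are the Deployment and Secret that connect the application to Redis. The following is a hedged sketch, not the repository's code: it assumes a hypothetical variable `var.app_image` holding the image URL, a container listening on port 8080, and the name and label `my-app`; only `google_redis_instance.data` comes from the blocks in this article.

```hcl
# Sketch: Redis connection details for the application.
resource "kubernetes_secret" "redis" {
  metadata {
    name = "redis-connection" # hypothetical name
  }

  # Plain-text values; the provider base64-encodes them into the Secret.
  data = {
    REDIS_HOST = google_redis_instance.data.host
    REDIS_PORT = tostring(google_redis_instance.data.port)
  }
}

# Sketch: the application Deployment, reading the Secret as env vars.
resource "kubernetes_deployment" "app" {
  metadata {
    name   = "my-app" # hypothetical name
    labels = { app = "my-app" }
  }

  spec {
    replicas = 2

    selector {
      match_labels = { app = "my-app" }
    }

    template {
      metadata {
        labels = { app = "my-app" }
      }

      spec {
        container {
          name  = "app"
          image = var.app_image # hypothetical variable with the image URL

          port {
            container_port = 8080 # hypothetical application port
          }

          # Expose every key of the Secret as an environment variable.
          env_from {
            secret_ref {
              name = kubernetes_secret.redis.metadata[0].name
            }
          }

          # CPU requests give an HPA a baseline for measuring utilization.
          resources {
            requests = {
              cpu    = "250m"
              memory = "256Mi"
            }
          }
        }
      }
    }
  }
}
```

With `env_from`, the container sees `REDIS_HOST` and `REDIS_PORT` as environment variables, so the application code needs no knowledge of how the Redis instance was provisioned.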

Pros & Cons

| Pros | Cons |
|---|---|
| Deploys Docker containers to production quickly and easily | Requires some Kubernetes knowledge |
| Highly available by nature, especially for microservices | The high level of abstraction can take some control away from the user |
| Handles application autoscaling natively using the HPA | Running a cluster in GKE is more expensive than running the same set of applications in GCE |
| Communication between applications in the cluster is easy using Kubernetes Services | Less customizable than other solutions, since some features are managed by Google |
| Easier to deploy and manage than alternatives such as kops | |
| The control plane lets the user manage almost everything in the cluster | |
| Usually requires less manual work than other solutions, such as GCE | |

Check back each week for the next instalment in the series.
