Part 1 of a 6-Part Series on Deploying on Google Cloud Platform

We recently ran five mock deployments on Google Cloud Platform, each using a different method, to understand the nuances of each one.

The five methods we investigated were:

- Google Compute Engine

- Google Kubernetes Engine

- App Engine

- Cloud Run

- Cloud Functions

We will examine each of the five approaches over the coming weeks and wrap up with an article that compares our findings across all five.

For the first article in the series, we deploy an application on Google Cloud Platform using Google Compute Engine.

Deploying with Google Compute Engine

Google Compute Engine (GCE) is the IaaS (Infrastructure as a Service) solution that Google Cloud Platform provides.

GCE lets you allocate specific hardware resources, such as RAM, CPU and storage. It gives the user full control over their instances: what software is installed, how services are run, and how the instances connect to other instances and the internet.

GCE provides pre-made OS images within its store and lets users create and manage their own OS images as well. It also supports preemptible instances, which work the same as normal instances but cost less, provided that the application is fault-tolerant: Google can shut those instances down when it needs the capacity for other workloads.

Another interesting feature is Instance Groups, which allow the user to manage and run multiple instances with the same image and configuration, and make auto-scaling and load-balancing easy to manage.

More of its features are listed in the Google Compute Engine documentation.
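In Terraform, which we use later in this article, a preemptible instance is requested through the `scheduling` block of the instance template. The following is a minimal sketch rather than code from our project: the resource name, name prefix, machine type, image and network are all placeholder assumptions.

```hcl
# Sketch only: names, machine type, image and network are illustrative
# placeholders, not values taken from the project described in this article.
resource "google_compute_instance_template" "preemptible_example" {
  name_prefix  = "example-preemptible-"
  machine_type = "e2-small"

  disk {
    source_image = "debian-cloud/debian-12"
    boot         = true
  }

  network_interface {
    network = "default"
  }

  scheduling {
    # Preemptible instances cannot restart automatically and must be
    # terminated (not live-migrated) on host maintenance.
    preemptible         = true
    automatic_restart   = false
    on_host_maintenance = "TERMINATE"
  }
}
```

Because these instances can disappear at any moment, this kind of template only makes sense behind something that replaces lost instances, such as a managed instance group.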

When to use GCE

You should use GCE when you need an IaaS (Infrastructure as a Service) solution for your application. It best suits low-complexity deployments, such as websites, small apps or even huge monolithic applications, depending on team size. It is also a good fit when the team uses IaC (Infrastructure as Code) and/or Configuration Management software, since managing many instances that serve the same purpose by hand can be problematic.

GCE is also a sensible and affordable option when the application needs to be available 24/7 and respond instantly.

How we implemented it

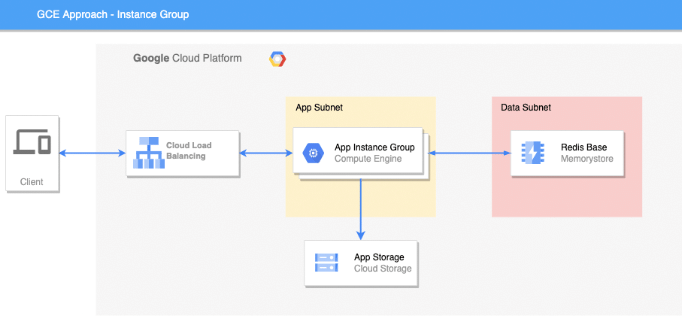

For this solution we used a Terraform project that declares the following resources:

- A VPC with a subnet for running the instances

- A bucket to store the application executable

- A Redis instance to store data

- An instance template, group manager, auto-scaling and load balancer for running the application

The project contains a simple counter application that stores its data in a Memorystore for Redis instance.
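To make the resource list above more concrete, here is a rough sketch of how the network, bucket and Redis instance could be declared. It is not copied from our repository. The resource names `google_compute_network.vpc` and `google_storage_bucket.app` match the references used in the snippets in this article, while the subnet and Redis resource names, the CIDR range, the bucket naming scheme, the tier and the memory size are assumptions. The variables `app_name`, `region` and `project_id` are assumed to be declared elsewhere.

```hcl
# VPC with a single custom subnet for the instances.
resource "google_compute_network" "vpc" {
  name                    = "${var.app_name}-vpc"
  auto_create_subnetworks = false
}

resource "google_compute_subnetwork" "app" {
  name          = "${var.app_name}-subnet"
  region        = var.region
  network       = google_compute_network.vpc.id
  ip_cidr_range = "10.0.0.0/24" # illustrative range
}

# Bucket that holds the application executable.
# Bucket names are global, so this hypothetical scheme prefixes the project ID.
resource "google_storage_bucket" "app" {
  name     = "${var.project_id}-${var.app_name}-bin"
  location = var.region
}

# Redis instance reachable from the VPC.
resource "google_redis_instance" "store" {
  name               = "${var.app_name}-redis"
  region             = var.region
  tier               = "BASIC" # assumption: smallest tier for a demo
  memory_size_gb     = 1       # assumption
  authorized_network = google_compute_network.vpc.id
}
```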

Main Components

Instance Group

In the template we declare the machine type, region, disk, network and startup script. The script installs gcloud-cli, downloads the executable from the bucket and executes it.

    resource "google_compute_instance_template" "instance_template" {
      name_prefix  = "${var.app_name}-tmpl"
      machine_type = var.app_machine_type
      region       = var.region
      ...
      metadata_startup_script = <<-USERDATA
        #!/bin/bash
        ...
        gsutil cp gs://${google_storage_bucket.app.name}/3t-app 3t-app
        ...
        ./3t-app
      USERDATA
    }

Instance Group Manager

In the Instance Group Manager we declare the size of the group (target_size), the template to use and the named ports. In our case the application listens on port 8080, which we name http.

    resource "google_compute_region_instance_group_manager" "app" {
      name               = "${var.app_name}-mig"
      base_instance_name = "${var.app_name}-srv"
      target_size        = 2

      version {
        instance_template = google_compute_instance_template.instance_template.id
      }
      ...
      named_port {
        name = "http"
        port = 8080
      }
    }

Auto Scaler

In Auto Scaler we define the target group and the autoscaling policy.

In this case the autoscaler is configured to add instances when the average CPU utilization of the group rises above 50%.

min_replicas is set to 2 and max_replicas is set to 5.

    resource "google_compute_region_autoscaler" "app" {
      name   = "${var.app_name}-auto-scaler"
      region = var.region
      target = google_compute_region_instance_group_manager.app.id

      autoscaling_policy {
        max_replicas    = 5
        min_replicas    = 2
        cooldown_period = 60

        cpu_utilization {
          target = 0.5
        }
      }
    }

Load Balancer

To set up the load balancer we use the official GCP module.

We declare the backend pointing to the named port in the instance group (http).

    module "app_lb" {
      source            = "GoogleCloudPlatform/lb-http/google"
      name              = "${var.app_name}-lb"
      firewall_networks = [google_compute_network.vpc.name]
      project           = var.project_id

      backends = {
        default = {
          protocol  = "HTTP"
          port      = 8080
          port_name = "http"

          health_check = {
            request_path = "/health"
            port         = 8080
          }

          groups = [
            {
              group = google_compute_region_instance_group_manager.app.instance_group
            }
          ]
          ...
        }
      }
    }

Other components

The complete solution, including the other components, can be found in our repository: https://github.com/nearform/gcp-articles
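To try the deployment after `terraform apply`, it helps to know the public address of the load balancer declared above. A small sketch, assuming the version of the lb-http module in use exposes an `external_ip` output; the output name `load_balancer_ip` is our own choice for illustration:

```hcl
# Prints the load balancer's public IP after apply.
# Assumption: the lb-http module version in use provides "external_ip".
output "load_balancer_ip" {
  description = "Public IP address of the HTTP load balancer"
  value       = module.app_lb.external_ip
}
```

With this in place, `terraform output load_balancer_ip` should return the address to request over HTTP once the health checks pass.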

Pros & Cons

| Pros | Cons |
|---|---|
| Launch VMs on demand | Usually requires SysAdmin knowledge |
| Usually cheaper than other alternatives for software that runs 24/7 | Its SLA for a single instance is 99.5% uptime |
| Fully configurable hardware resources, networking and storage | More manual work than using other options, like GKE |
| Lots of premade instance images are available on the GCE store | It takes longer to build a complex architecture than with other options like GKE |
| Autoscaling can be configured with meaningful metrics, like requests per second | Harder to keep instances’ OS and software up-to-date |
| A single instance can run lots of applications, as well as monitoring and APM tools | |
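As an illustration of the autoscaling point in the table, the autoscaler in this article could scale on load balancer serving capacity instead of CPU. This is a sketch of a variant, not what our project does: the 0.8 target is an arbitrary example value, and it assumes the load balancer backend defines a serving capacity for the group (for example, a maximum request rate per instance).

```hcl
# Variant of the autoscaler shown earlier, replacing cpu_utilization.
resource "google_compute_region_autoscaler" "app" {
  name   = "${var.app_name}-auto-scaler"
  region = var.region
  target = google_compute_region_instance_group_manager.app.id

  autoscaling_policy {
    max_replicas    = 5
    min_replicas    = 2
    cooldown_period = 60

    # Scale to keep the group at roughly 80% of the serving capacity
    # configured on the load balancer backend (illustrative value).
    load_balancing_utilization {
      target = 0.8
    }
  }
}
```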

Check back each week for the next instalment in the series.
