Mapping a compliant AI strategy amid regulatory uncertainty

Given the security and regulatory implications of leveraging AI, organisations face increasing urgency to develop a sophisticated strategy.

The world’s first binding law on artificial intelligence (AI), the EU’s Artificial Intelligence Act, which came into force on 1 August 2024, is predicted to cause ripple effects for all global companies engaged in developing and using AI. It is likely to be followed soon by regulations and policies from other governments, including those of the UK, the US, China and Japan.

With so much uncertainty, leveraging AI in a way that protects enterprises and stakeholders from potential legal consequences can be daunting. This article aims to ease that concern with expert information and insights to help you map a compliant AI strategy.

Shifting from “analysis paralysis” to positive action

Organisations around the world are at different stages in developing their artificial intelligence systems, and many are concerned about how to build secure systems that share data effectively while also maintaining data privacy.

| Nearform expert insight: | “Organisations certainly need someone accountable to manage a rapidly evolving AI policy and data strategy. Because there's no stopping this [AI] boat. You’ve got to get the team on it, and make sure that you have a direction, that you're not just running rudderless into the ocean into the tidal wave.” Joe Szodfridt, Senior Solutions Principal, Nearform |

Adding to the uncertainty, 2024 is a record year for elections, with polls in more than 40 of the world’s democracies. Approximately 4 billion people, or about half of the world’s population, are eligible to vote. The candidates they choose may shape the direction of AI legislation for years to come. Because the make-up of many governments could change, current AI legislative frameworks are even less certain than businesses would like.

What businesses can be certain of is that AI governance frameworks will play a major role in ensuring secure and regulated AI deployment. That role will not only be large; it will be a “separator” in a very crowded market. Organisations that proactively develop or refine AI applications in a flexible and agile way will be positioned to move ahead of those that don’t, because they will be better able to adapt as governments and regulations change and evolve.

In this landscape, companies, especially those in highly regulated industries, would be wise to consult technology partners who know how to build agile tools and processes. AI and data experts can help organisations collaboratively map a coherent AI strategy that meets them where they are, and build solutions that scale and thrive regardless of the regulatory environment. And because creating new company AI policies requires capabilities and up-to-date market and technology awareness that the company may not have in house, hiring an experienced technology partner is advisable.

| Expert insight: | “We finally have the world’s first binding law on artificial intelligence, to reduce risks, create opportunities, combat discrimination, and bring transparency. Thanks to Parliament, unacceptable AI practices will be banned in Europe and the rights of workers and citizens will be protected. The AI Office will now be set up to support companies to start complying with the rules before they enter into force. We ensured that human beings and European values are at the very centre of AI’s development.” Brando Benifei, Internal Market Committee co-rapporteur, European Parliament |

| By the numbers: | - In 2023, there were 25 AI-related regulations in the US, up from just one in 2016. - Last year alone, the total number of AI-related regulations grew by 56.3%. - 80% of data experts agree that AI is increasing data security challenges. |

Defining the principles of AI policy

Before exploring the effects of AI regulation, it helps to first understand the goals of government legislation.

UNESCO defines four core values as the foundation for developing AI systems, which it says should “work for the good of humanity, individuals, societies and the environment.” The values identified by UNESCO are:

Human rights and human dignity: Respect, protection and promotion of human rights and fundamental freedoms and human dignity

Living in peaceful, just and interconnected societies

Ensuring diversity and inclusiveness

Environment and ecosystem flourishing

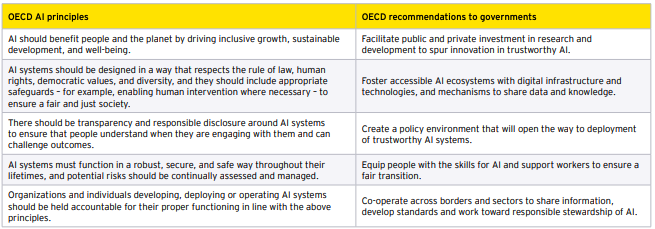

Additionally, the OECD has adopted a global benchmark to help guide governments toward promoting a human-centric approach to AI development. These principles were adopted by the nations of the G20 in 2019, and are guiding the way the EU and other governments are crafting their policies.

Graphic Source: The Artificial Intelligence (AI) global regulatory landscape

Individual governments are moving, at varying paces, to develop legislation that addresses AI-related issues ranging from risk and transparency to bias and discrimination, and privacy and security. The diversity of stakeholders and the many potential impacts associated with AI introduce a level of complexity that is difficult to address in any single piece of legislation.

The role of AI governance in secure deployment

AI governance is an organisation’s strategic framework that encompasses policies, principles and practices essential for guiding the development, deployment and utilisation of artificial intelligence technologies. This governance includes not only compliance with applicable government regulations, but also compliance with company-specific policies.

Implementing proper AI governance is crucial because it ensures that AI development progresses in line with corporate strategy while protecting key stakeholders. Oversight is a key part of this framework, so companies need clear visibility into how their AI products and systems are designed and built.

Companies should also seek to ensure that AI tools are transparent, accountable and explainable. A well-designed framework goes beyond compliance to foster trust and confidence in AI technologies among stakeholders.

Having a robust AI governance framework not only helps to avoid financial penalties; it also demonstrates trustworthiness to potential customers, which can help an organisation build positive public sentiment and stand out from competitors.

How risky is your AI?

Gartner* reports that the EU’s Artificial Intelligence Act is likely to disrupt technology companies well beyond the borders of the European Union. As one of the first comprehensive AI regulations, it may serve as a template for other countries to adopt and adapt.

The Act goes further than the principles outlined by the OECD and defines four specific risk categories for AI applications, ranging from “unacceptable” to “minimal”.

“Unacceptable risk” includes real-time remote biometric identification in publicly accessible spaces for law enforcement (outside narrowly defined exceptions), tools that gather facial images from the internet or from public video surveillance systems to create facial recognition databases, and tools that draw conclusions about people’s emotions in the workplace. These practices will be banned in EU markets.

“High risk” products, including those deemed to “have the potential to cause harm to health, safety, fundamental rights, environment, democracy and the rule of law”, will be allowed under certain circumstances but heavily regulated.

Companies creating products in the “Limited risk” category, which includes chatbots and deepfake generators, will be required to inform users that they are interacting with an AI system, and ensure that all AI-generated content is labelled.

“Minimal risk” products, which include spam filters and recommendation systems, will not be subject to any specific restrictions or requirements under the Act.
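To make the four tiers more concrete, here is a sketch of how a team might run a first-pass triage of its own AI use cases against them. It is a simplified Python illustration that assumes a hypothetical internal inventory in which each use case is tagged with screening flags. The flag names and the one-line obligation summaries are our own paraphrase of the tiers described above, not terms defined in the Act, and any real classification still needs legal review.

```python
from enum import Enum


class RiskTier(Enum):
    """The four EU AI Act risk tiers, as summarised in this article."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Simplified obligations per tier, paraphrased from the article (not legal text).
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["banned in EU markets"],
    RiskTier.HIGH: ["permitted only under strict requirements"],
    RiskTier.LIMITED: [
        "tell users they are interacting with an AI system",
        "label AI-generated content",
    ],
    RiskTier.MINIMAL: [],
}

# Hypothetical screening flags an internal AI inventory might record.
PROHIBITED_FLAGS = {
    "realtime_remote_biometric_id",
    "scrapes_facial_images",
    "workplace_emotion_inference",
}
HIGH_RISK_FLAGS = {"affects_health_or_safety", "affects_fundamental_rights"}
LIMITED_RISK_FLAGS = {"chatbot", "generates_synthetic_media"}


def triage(flags: set[str]) -> RiskTier:
    """Return the most severe tier any flag points to (first-pass screening only)."""
    if flags & PROHIBITED_FLAGS:
        return RiskTier.UNACCEPTABLE
    if flags & HIGH_RISK_FLAGS:
        return RiskTier.HIGH
    if flags & LIMITED_RISK_FLAGS:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


if __name__ == "__main__":
    inventory = [
        ("customer support chatbot", {"chatbot"}),
        ("spam filter", set()),
        ("staff mood tracking", {"workplace_emotion_inference"}),
    ]
    for name, flags in inventory:
        tier = triage(flags)
        print(f"{name}: {tier.value} -> {OBLIGATIONS[tier]}")
```

Checking the most severe tier first means a use case that carries several flags is always escalated rather than waved through under a lighter tier.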

In the United States, the Biden administration has created a blueprint for an “AI Bill of Rights”, which reflects many of the OECD’s principles. Additionally, some US lawmakers have stated that existing laws and regulations already provide significant authority to address bias, fraud, anti-competitive behaviour and other potential risks caused by AI. The White House Office of Science and Technology Policy (OSTP) coordinates the federal government’s efforts to set AI policy through the National Artificial Intelligence Initiative Office, which was established by the US Congress in 2020. Though no specific date for release has been set, the OSTP has announced that it will release a “National AI Research and Development Strategic Plan” to encourage AI development that “promotes responsible American innovation, serves the public good, protects people’s rights and safety and upholds democratic values.”

Given the EU’s AI Act, and the ongoing development of guidelines and regulations in other countries, leaders of tech organisations would be well advised to evaluate their own exposure. An experienced technology partner that is familiar with AI governance and risk management can help with this process.

Assessing AI compliance requirements

Robust AI risk management involves employees at all levels of an organisation. Accountability and transparency are key: governance frameworks, responsibilities and controls should be established at the top levels of leadership and then cascade through management and operations.

The first step in assessing compliance requirements is to identify which AI regulations and policies are in effect, or about to take effect, wherever the business operates. Best practice suggests that companies creating AI-enabled services identify, manage and reduce AI-related risks by:

Having a clear line of accountability to individuals responsible for AI risk management.

Identifying AI operators within an organisation who can explain the AI system’s decision framework and how it works.

Establishing an AI ethics board to provide independent guidance to management on ethical considerations in AI development and deployment.

| Nearform expert insight: | “When you’re trying to determine if an AI process you’re using, or want to use, will comply with regulations, the first step is to list the use cases where the AI will help your business be more efficient. Then, identify the data that has to go along with those use cases and see what protections or policies or risks there are around that data currently. Figure out what you want to do, what data is involved, and what risk exposure you have with that data. Then you can decide how to restrict access to that data, or when to make it available to the system.” Joe Szodfridt, Senior Solutions Principal, Nearform |
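The sequence Szodfridt describes (list the use cases, identify the data each one needs, assess the exposure around that data, then decide what to restrict) can be expressed as a small data-driven check. This is a minimal Python sketch under stated assumptions: the data categories, the 0 to 3 sensitivity scale and the `max_allowed` threshold are hypothetical placeholders that an organisation would replace with its own data classification.

```python
from dataclasses import dataclass

# Hypothetical sensitivity scale for data categories (0 = public, 3 = regulated).
SENSITIVITY = {
    "public_marketing": 0,
    "internal_docs": 1,
    "customer_pii": 2,
    "payment_data": 3,
}


@dataclass
class UseCase:
    name: str
    data_categories: list[str]  # the data this use case would expose to the AI tool


def assess(use_case: UseCase, max_allowed: int = 1) -> tuple[str, list[str]]:
    """Return a decision plus the data categories that would need restricting.

    Unknown categories are treated as most sensitive, so they are never
    silently allowed.
    """
    worst = max(SENSITIVITY.values())
    restricted = [
        c for c in use_case.data_categories
        if SENSITIVITY.get(c, worst) > max_allowed
    ]
    if not restricted:
        return "allow", []
    if len(restricted) < len(use_case.data_categories):
        # Some data is acceptable, so the use case can proceed without the rest.
        return "allow with restricted data", restricted
    return "needs review", restricted


print(assess(UseCase("summarise meeting notes", ["internal_docs"])))
# ('allow', [])
print(assess(UseCase("draft client emails", ["internal_docs", "customer_pii"])))
# ('allow with restricted data', ['customer_pii'])
```

Treating unclassified data as the most sensitive category is a deliberately conservative default: a new data source has to be classified before an AI tool can use it.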

Developing internal AI policies

Any organisation using AI tools will need to establish a clear set of guidelines and policies for its teams and stakeholders. These are separate from, but relevant to, any government regulations on the use of AI in its industry. Internal policies should set out everything employees at all levels need to know: which uses of AI are permitted, in which areas or contexts, and what limitations (if any) apply. The policy should be based on a framework agreed upon by stakeholders representing all key parts of the business, and it should evolve as the company’s use of AI tools changes and as external guidelines and regulations develop.

Key components of a robust internal policy can include:

A detailed description of which AI tools (if any) are permitted, and for what tasks.

A clear list of security risks, including which types of information must not be used with an AI system, with reminders to protect sensitive data and not to share unapproved proprietary company information with any AI platform.

Mandatory human review of AI-generated output: a review procedure in which an employee checks all AI output for accuracy and bias.

It is important to remember that humans are a key part of any use of artificial intelligence systems. AI tools exist to remove human drudgery and amplify human capability, so responsible development of the technology means every guideline and policy should prioritise the safety, security and well-being of employees and customers.

Beyond internal policy, building agile tools that can be modified as circumstances and regulations change can help streamline the implementation of compliant AI systems. It’s even possible to use intelligent tools to make the process less manual. For example, internal users can be given a set of profiles to work through to determine whether a given situation and use case fits established guidelines and policies. This kind of insight can save time, money and frustration, and make mapping a coherent and compliant AI policy much less daunting.
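A profile-based check like this can be prototyped with very little code. The following Python sketch is a hypothetical example, not a Nearform tool: it encodes the policy components listed earlier (approved tools, permitted tasks, prohibited data and mandatory human review) as profiles and reports why a proposed use falls outside one. The profile, tool, task and data names are illustrative assumptions; the transaction rule echoes the kind of restriction described in the case study that follows.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Profile:
    """Hypothetical policy profile for one group of internal users."""
    name: str
    permitted_tools: frozenset[str]
    permitted_tasks: frozenset[str]
    prohibited_data: frozenset[str] = frozenset()
    human_review_required: bool = True


@dataclass
class Request:
    """A proposed use of an AI tool, described by tool, task and data involved."""
    tool: str
    task: str
    data: set[str] = field(default_factory=set)


def check(profile: Profile, request: Request) -> list[str]:
    """Return the reasons a request falls outside the profile (empty list = fits)."""
    problems = []
    if request.tool not in profile.permitted_tools:
        problems.append(f"tool '{request.tool}' is not approved")
    if request.task not in profile.permitted_tasks:
        problems.append(f"task '{request.task}' is not permitted")
    blocked = sorted(request.data & profile.prohibited_data)
    if blocked:
        problems.append(f"prohibited data: {', '.join(blocked)}")
    return problems


def decide(profile: Profile, request: Request) -> str:
    """Summarise the check, flagging mandatory human review of the AI output."""
    problems = check(profile, request)
    if problems:
        return "not permitted: " + "; ".join(problems)
    if profile.human_review_required:
        return "permitted, human review required"
    return "permitted"


# Hypothetical profile: informational use only, no transaction data.
client_team = Profile(
    name="client team",
    permitted_tools=frozenset({"assistant"}),
    permitted_tasks=frozenset({"summarise", "research"}),
    prohibited_data=frozenset({"transaction_records"}),
)

print(decide(client_team, Request("assistant", "summarise")))
# permitted, human review required
print(decide(client_team, Request("assistant", "modify_transaction", {"transaction_records"})))
# not permitted: task 'modify_transaction' is not permitted; prohibited data: transaction_records
```

Keeping the rules as data rather than logic is what makes such a tool agile: when a regulation or internal policy changes, teams edit a profile instead of rewriting code.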

| Nearform case study: | Financial services company |
|---|---|
| Issue: | A US-based financial services institution with annual revenue of over $100 million and hundreds of employees needed to determine its regulatory exposure from using Microsoft 365 Copilot’s AI tools. It wanted to continue using Copilot, but needed to determine whether and where it could use it while staying compliant with data-use regulations. |
| Solution: | Nearform’s experts: - Convened a group of senior stakeholders representing different functions, including enterprise risk, information security and product. - Gathered information about relevant regulations and policies from hundreds of different sources, including EU regulations, best practices from the US and policy recommendations from NIST (National Institute of Standards and Technology). - Determined relevant best practices, reviewed potential future regulations and identified the need for an additional data strategy. - Built consensus among the stakeholders regarding what they wanted to accomplish, as well as their comfort level with risk. - Developed a roadmap for creating a robust data strategy, and approved use cases for the data and Microsoft 365 Copilot. |
| Impact: | - Permissions were established for client teams to use Copilot’s AI for all types of informational purposes. - A new policy was established prohibiting the use of AI to modify any type of business-critical transaction. - A data strategy plan was implemented, defining what data could be accessed by AI tools and who has access to what data, and restricting AI from anything relating to a transaction. - An incremental process was designed to establish a software supply chain, alert governing bodies and gain the necessary executive sponsorship to evolve the policy going forward. Note: This process was completed in approximately 90 days. |

Expert partners can derisk AI compliance

In working with organisations in some of the most heavily regulated sectors — financial services companies, healthcare businesses and government agencies — Nearform has helped to develop tools and capabilities that refine their digital strategies and streamline their processes.

Given the security and regulatory implications of leveraging AI, organisations face increasing urgency to develop a sophisticated strategy, as well as the ability to implement it. Mistakes can be costly, leaving the company out of compliance and exposed to reputational damage, regulatory fines and other penalties.

Experienced partners like Nearform can leverage a track record of success in accelerating how enterprises gain AI’s business benefits, while also providing guidance in how to optimise products, processes, policies and more — to remove confusion and guesswork in favour of a strategic adoption of AI. Contact Nearform today to discuss how we can help your enterprise.

*Data source: EU’s New AI Act Will Disrupt Tech CEOs in Europe and Beyond — Source = Gartner, Published 23 May 2024 - ID G00816805 - GARTNER is a registered trademark and service mark of Gartner, Inc. and/or its affiliates in the U.S. and internationally and is used herein with permission. All rights reserved.
