Reaching Ludicrous Speed with Fastify

In this post, we're going to take a look at Fastify, a high-performance HTTP framework for Node.js written by Matteo Collina and Tomas Della Vedova.

Correction: an earlier version of this article was published with diagrams that reported incorrect benchmark results. The benchmarks had been run with 10 connections instead of 100 and included data for an older version of hapi. These issues have been corrected in the current version of the article. See fastify/benchmarks#39 and fastify/fastify#545.

Benchmarking Web Frameworks

To better understand where different frameworks stand in terms of performance, we'll begin with some load testing. Fastify provides a number of basic benchmarks for different frameworks, and different configurations.

We're going to see how Fastify stacks up to some of the most popular frameworks in use today: Express, hapi, Restify, and Koa.

Each server is set up with one route, GET /. The route handler serialises the object { hello: 'world' } and sends it to the client as JSON.

Our baseline will be a simple http.Server instance without any router. We're using the default router for each framework, but as Koa doesn't supply a router by default, we're using koa-router.
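For reference, a baseline of this kind might look like the sketch below. It is an illustration, not the exact file from the fastify/benchmarks repository; the handler name helloHandler and the port in the closing comment are assumptions.

```javascript
const http = require('http')

// Hypothetical handler name: it answers every request with the same JSON body.
function helloHandler (req, res) {
  const body = JSON.stringify({ hello: 'world' })
  res.setHeader('Content-Type', 'application/json')
  res.setHeader('Content-Length', Buffer.byteLength(body))
  res.end(body)
}

// No router involved: every method and path ends up in helloHandler.
const server = http.createServer(helloHandler)

// Start it with, for example: server.listen(3000)
```

Because there is no routing step at all, this is the ceiling the frameworks are measured against.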

To conduct the benchmark, we're going to use Autocannon with the command-line options autocannon -c 100 -d 40 -p 10.

- -c 100 indicates we want to have 100 concurrent connections
- -d 40 indicates we want the benchmark to run for forty seconds
- -p 10 indicates we want to send up to ten pipelined requests over each connection (HTTP pipelining)

We run the Autocannon command twice and take the average from the second run as our result. During the first run, V8 (the JavaScript engine behind Node) performs optimisations to maximise runtime performance, so the second run gives us a more realistic idea of performance. (All the benchmarks in this post were run on an Amazon EC2 m4.xlarge instance, with an Intel Xeon E5-2686 v4 @ 2.30GHz (4 cores, 8 threads) and 16GiB RAM. We used Node 8.4.0, and the actual code for the tests lives in https://github.com/fastify/benchmarks.)
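The warm-up-then-measure procedure can be sketched as a small helper. This is a sketch under assumptions: secondRunAverage and runOnce are hypothetical names, and the result shape (requests.average) is assumed to match what the load-testing tool reports.

```javascript
// Runs the benchmark twice and reports only the second run.
// `runOnce` is any function returning a promise of a result object
// shaped like { requests: { average: <number> } } (an assumption).
async function secondRunAverage (runOnce) {
  await runOnce() // warm-up run: lets V8 optimise hot code, result discarded
  const measured = await runOnce() // measured run
  return measured.requests.average
}

// Possible wiring with Autocannon's programmatic API (assumed, not executed here):
// const autocannon = require('autocannon')
// const runOnce = () => autocannon({
//   url: 'http://localhost:3000', connections: 100, duration: 40, pipelining: 10
// })
// secondRunAverage(runOnce).then(console.log)
```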

Reaching Ludicrous Speed

The benchmarks above demonstrate a very simple use case. Yet, Express processes 42.97% fewer requests per second than http.Server, and that's without any additional middleware or external I/O. How does Fastify manage to squeeze out an extra 51.37% requests per second when compared with Express?
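The 51.37% figure can be checked against the Requests/sec row of the feature comparison table later in this post. The helper name percentGain is only for illustration.

```javascript
// Requests per second, taken from the comparison table in this post.
const requestsPerSecond = { express: 20690, fastify: 31319 }

// Relative gain of `candidate` over `baseline`, as a percentage.
function percentGain (baseline, candidate) {
  return ((candidate - baseline) / baseline) * 100
}

const gain = percentGain(requestsPerSecond.express, requestsPerSecond.fastify)
console.log(gain.toFixed(2) + '%') // prints "51.37%"
```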

Closures

Compared with other frameworks, Fastify has a much smaller call stack when dealing with a request. In the presentation at the end of this blog post, Matteo provides flame graphs to show both the call stack of different frameworks and what functions take the most time to complete. Reusify is used to squeeze a 10% increase in performance when handling middleware. To read more about how reusify works, check out Matteo's post about both reusify and Steed.
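To give a feel for the object-reuse idea, here is a minimal free-list pool. It is loosely modelled on the concept behind reusify and is not the library's code; createPool and RequestState are hypothetical names.

```javascript
// A tiny free-list pool: released objects are chained through `next`
// and handed out again instead of being allocated from scratch.
function createPool (Constructor) {
  let head = new Constructor()

  function get () {
    const current = head
    if (current.next) {
      head = current.next // reuse a previously released object next time
    } else {
      head = new Constructor() // pool exhausted: allocate one more
    }
    current.next = null
    return current
  }

  function release (obj) {
    obj.next = head
    head = obj
  }

  return { get, release }
}

// Hypothetical per-request state object; it must carry a `next` field.
function RequestState () {
  this.next = null
  this.url = ''
}

const pool = createPool(RequestState)
const state = pool.get()
state.url = '/'
pool.release(state) // hand the object back once the request is done
```

Reusing objects on a hot path keeps their shape stable and reduces pressure on the garbage collector, which is where this kind of gain would come from.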

Routing

One of the key areas of optimisation was in routing. Internally, Fastify uses a radix-tree-based router called find-my-way. Compared with routers used by other frameworks, find-my-way has a clear advantage in the number of operations per second it can perform.

To compare them, we're using router-benchmark to benchmark the most commonly used routers.

find-my-way supports simple path strings, wildcards, named parameters, and regular expressions (though they add some additional overhead). Check it out on GitHub to find out more.
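To illustrate the data structure, here is a minimal radix tree for static paths only. It is a sketch of the general technique, not find-my-way's implementation, and it leaves out parameters, wildcards, regular expressions and HTTP methods. All names (RadixNode, insert, lookup) are hypothetical.

```javascript
// Each node stores a shared path fragment; children never share a first character.
class RadixNode {
  constructor (prefix) {
    this.prefix = prefix
    this.children = []
    this.handler = null
  }
}

function commonPrefixLength (a, b) {
  let i = 0
  while (i < a.length && i < b.length && a[i] === b[i]) i++
  return i
}

// Paths are assumed to be non-empty strings starting with "/".
function insert (root, path, handler) {
  let node = root
  let rest = path
  while (true) {
    const child = node.children.find(c => c.prefix[0] === rest[0])
    if (!child) {
      const leaf = new RadixNode(rest)
      leaf.handler = handler
      node.children.push(leaf)
      return
    }
    const len = commonPrefixLength(child.prefix, rest)
    if (len < child.prefix.length) {
      // Split the edge: keep the shared part here, push the remainder down.
      const tail = new RadixNode(child.prefix.slice(len))
      tail.children = child.children
      tail.handler = child.handler
      child.prefix = child.prefix.slice(0, len)
      child.children = [tail]
      child.handler = null
    }
    rest = rest.slice(len)
    if (rest.length === 0) {
      child.handler = handler
      return
    }
    node = child
  }
}

function lookup (root, path) {
  let node = root
  let rest = path
  while (rest.length > 0) {
    const child = node.children.find(c => rest.startsWith(c.prefix))
    if (!child) return null
    rest = rest.slice(child.prefix.length)
    node = child
  }
  return node.handler
}

const router = new RadixNode('')
insert(router, '/user', () => 'user')
insert(router, '/users', () => 'users')
insert(router, '/about', () => 'about')
console.log(lookup(router, '/users')()) // prints "users"
```

A lookup walks at most one child per shared fragment, so its cost depends on the length of the path and not on how many routes are registered.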

JSON Serialisation

JSON.stringify() is one of the most commonly used functions when building web services. The function itself is part of the JavaScript language and is implemented in V8 (the JavaScript engine that powers Node.js) as C++.

Fastify makes use of fast-json-stringify which, as the name suggests, is a more performant version of V8's JSON.stringify() function. It's written entirely in JavaScript, but manages to outperform JSON.stringify() in most cases by using schemas and new Function() in a safe manner.

To compare how the two perform against each other, we're using the benchmark module. The code for this benchmark is available from the fast-json-stringify GitHub repo.

Benchmarks show a considerable boost when serialising JavaScript object literals, over 2.5x the number of operations per second. Similarly, it can perform twice as fast as JSON.stringify() when serialising short strings.

There's also a minor boost when serialising arrays. Performance with long strings is roughly on par with JSON.stringify(), due to fast-json-stringify using JSON.stringify() to serialise strings more than 42 characters in length.
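The short-string fast path with a fallback for long strings can be pictured roughly as follows. This is a simplified illustration of the idea and not the library's actual code; serialiseString is a hypothetical name, and the 42-character threshold is the one quoted above.

```javascript
// Short strings that need no escaping are simply wrapped in quotes;
// everything else is delegated to JSON.stringify().
function serialiseString (str) {
  if (str.length > 42) return JSON.stringify(str)
  for (let i = 0; i < str.length; i++) {
    const code = str.charCodeAt(i)
    const needsEscaping =
      code < 32 || // control characters
      code === 34 || // double quote
      code === 92 || // backslash
      (code >= 0xd800 && code <= 0xdfff) // surrogates: let JSON.stringify decide
    if (needsEscaping) return JSON.stringify(str)
  }
  return '"' + str + '"'
}

console.log(serialiseString('hello')) // prints "hello" (including the quotes)
```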

fast-json-stringify isn't a drop-in replacement for JSON.stringify(), but using it is simple:

    const fastJSON = require('fast-json-stringify')

    const schema = {
      // the object type we want to stringify
      type: 'object',

      // define all object properties
      properties: {
        username: {
          type: 'string'
        },
        fullName: {
          type: 'string'
        },
        age: {
          type: 'integer'
        }
      }
    }

    const stringify = fastJSON(schema)

    console.log(stringify({
      username: 'johndoe',
      fullName: 'John Doe',
      age: 30
    }))

This prints:

    {"username":"johndoe","fullName":"John Doe","age":30}

How It Works

- First we outline a schema for our data. In this case, we define a simple object containing username, fullName and age keys.
- Calling fastJSON(schema) generates and returns a new function capable of returning a JSON version of an object, based on the schema.
- stringify(object) takes an object and returns a JSON representation of it as a string.
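The steps above can be pictured with a heavily simplified code generator. It is a sketch of the schema-plus-new-Function() idea only, not fast-json-stringify's implementation: buildStringify is a hypothetical name, only flat objects with string and integer properties are handled, and every property is assumed to be present.

```javascript
// Turns a flat object schema into a specialised serialiser function.
function buildStringify (schema) {
  const parts = Object.keys(schema.properties).map((key, index) => {
    const type = schema.properties[key].type
    // Keys are embedded via JSON.stringify so they cannot inject code.
    const label = JSON.stringify((index === 0 ? '' : ',') + JSON.stringify(key) + ':')
    const access = 'obj[' + JSON.stringify(key) + ']'
    const value = type === 'string'
      ? 'JSON.stringify(' + access + ')'
      : 'String(' + access + ')'
    return label + ' + ' + value
  })
  const body = 'return "{" + ' + (parts.length ? parts.join(' + ') : '""') + ' + "}"'
  // The generated function only knows about the properties named in the schema.
  return new Function('obj', body)
}

const toJSON = buildStringify({
  type: 'object',
  properties: {
    username: { type: 'string' },
    age: { type: 'integer' }
  }
})

console.log(toJSON({ username: 'johndoe', age: 30, occupation: 'Programmer' }))
// prints {"username":"johndoe","age":30}
```

Because the generated function is specialised for one shape, it skips the generic property enumeration and type inspection that JSON.stringify() has to do on every call.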

Not only is fast-json-stringify more performant than JSON.stringify() in most cases, it also prevents data from accidentally leaking. If we were to stringify an object containing properties besides username, fullName, and age, they wouldn't appear in the JSON string.

The example below demonstrates how this adds an extra layer of security to your application:

    console.log(stringify({
      username: 'janedoe',
      fullName: 'Jane Doe',
      age: 31,
      occupation: 'Programmer',
      socialMedia: {
        twitter: 'Jane2017P',
        github: 'Jane2017P'
      },
      securityNumber: '00000E'
    }))

This prints:

    {"username":"janedoe","fullName":"Jane Doe","age":31}

fast-json-stringify supports additional features such as setting required fields, pattern properties, and 64-bit integers. Check it out on GitHub to find out more.

The Server Lifecycle

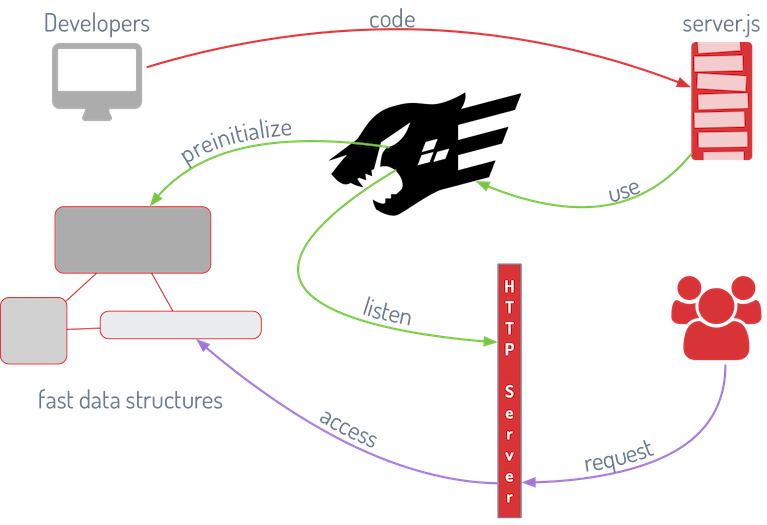

The lifecycle of most web frameworks is similar: the server starts, route handlers are registered, and the server listens for requests and calls the appropriate function to handle them. Fastify behaves slightly differently to gain extra performance.

When the server is started, Fastify carries out a preinitialisation stage. This stage performs a number of optimisations: JSON schemas are processed with fast-json-stringify, and handler functions are optimised with reusify.
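The benefit of doing this work up front can be shown with a toy route registry. This is a conceptual sketch, not Fastify's internals: addRoute, handle and compileSerialiser are hypothetical names, and the "compiler" here merely whitelists the schema's keys.

```javascript
// Hypothetical stand-in for a schema compiler; counts how often it runs.
let compileCount = 0
function compileSerialiser (schema) {
  compileCount++
  const keys = Object.keys(schema.properties)
  return function serialise (payload) {
    const picked = {}
    for (const key of keys) picked[key] = payload[key]
    return JSON.stringify(picked)
  }
}

const routes = new Map()

// Registration time: the expensive work happens here, once per route.
function addRoute (path, schema, handler) {
  routes.set(path, { handler, serialise: compileSerialiser(schema) })
}

// Request time: only the handler and the prebuilt serialiser run.
function handle (path) {
  const route = routes.get(path)
  return route.serialise(route.handler())
}

addRoute('/', { type: 'object', properties: { hello: { type: 'string' } } },
  () => ({ hello: 'world' }))

console.log(handle('/')) // prints {"hello":"world"}
console.log(handle('/')) // same output, no recompilation
```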

Building with Fastify

Often, writing libraries and frameworks with the aim of maximising performance comes at the cost of readability, good API design, or features. Fastify's feature set resembles a combination of hapi and Express, and exposes an API that was built with developer happiness in mind.

Here's a list of Fastify's feature set in comparison with Express and hapi:

| | Express | hapi | Fastify |
|---|---|---|---|
| Router | | | |
| Middleware | | | |
| Plugins | | | |
| Validation | | | |
| Hooks | | | |
| Decorators | | | |
| Logging | | | |
| async/await | | | |
| Requests/sec | 20,690 | 19,824 | 31,319 |

To start using the framework, we need to install Fastify. Version 0.35.6 is the latest version as of this tutorial, so we'll go ahead and grab it via npm:

    npm install fastify@0.35.6

Below is a simple server written using Fastify. It has a single GET / route which sends a JSON object to the client. (Note: this example doesn't take advantage of fast-json-stringify. We'll take a look at that in a bit!)

    // Instantiate a Fastify server
    const fastify = require('fastify')()

    // Declare a route
    fastify.get('/', function (request, reply) {
      reply.send({ hello: 'world' })
    })

    // Start the server
    fastify.listen(3000, function (err) {
      if (err) throw err

      let port = fastify.server.address().port
      console.log(`Server listening: ${port}`)
    })

Fastify also supports async/await. The above route could be simplified into the following:

    let simpleHandler = async (request, reply) => ({ hello: 'world' })

    fastify.get('/simple-async', simpleHandler)

If we were to wait for external I/O such as a database response, we could use the async/await syntax to simplify our business logic.

    let awaitHandler = async function (request, reply) {
      // grab details from a database
      let user = await users.get('sigkell')
      return user
    }

    fastify.get('/async-await', awaitHandler)

Making Use of fast-json-stringify

The examples above don't take advantage of fast-json-stringify to serialise response objects. To get the additional performance boost, we need to set the schema in our route's options object.

    // This object contains additional config for our route
    const options = {
      schema: {
        response: {
          200: {
            type: 'object',
            properties: {
              hello: {
                type: 'string'
              }
            }
          }
        }
      }
    }

    // Set our options object as the second argument
    fastify.get('/fastjson', options, function (request, reply) {
      reply.send({ hello: 'world' })
    })

Conclusion

Fastify is a great choice for building an application where performance is a critical concern. Compared with some of the more established frameworks, Fastify is capable of processing over 1.5 times the number of requests per second when dealing with JSON data, maximising the price/performance ratio of your servers.

To evaluate how Fastify could enhance the performance of your application, take a look at the documentation available on GitHub and take it for a test drive.

If you're interested in learning how Fastify came about, and about HTTP server performance in general, be sure to check out Matteo Collina's Take Your HTTP Server to Ludicrous Speed below!
